Comparison of OCR tools: how to choose the best tool for your project

Fabian Gringel

Optical character recognition (OCR) is the task of automatically extracting text from images, which typically come in formats such as PNG or JPG but may also be embedded in a PDF file. Nowadays, there is a variety of OCR software tools and services that are easy to use and make this task a no-brainer. In this blog post, I will compare four of the most popular tools:

Tesseract OCR

ABBYY FineReader

Google Cloud Vision

Amazon Textract

I will show how to use them and assess their strengths and weaknesses based on their performance on a number of tasks. After reading this article, you will be able to choose and apply an OCR tool that suits the needs of your project.

Note that we restrict our focus to OCR on document images, as opposed to images that merely happen to contain text.

Now let’s have a look at the document images we will use to assess the OCR engines.

Our test images

Document images come in different shapes and qualities. Sometimes they are scanned, other times they are captured by handheld devices. Apart from printed text they might also contain handwriting and structural elements such as boxes and tables. Thus the ideal OCR tool should

recognize well-scanned text reliably,

be robust towards bad image quality and handwriting,

output information on the formatting and structure of the document.

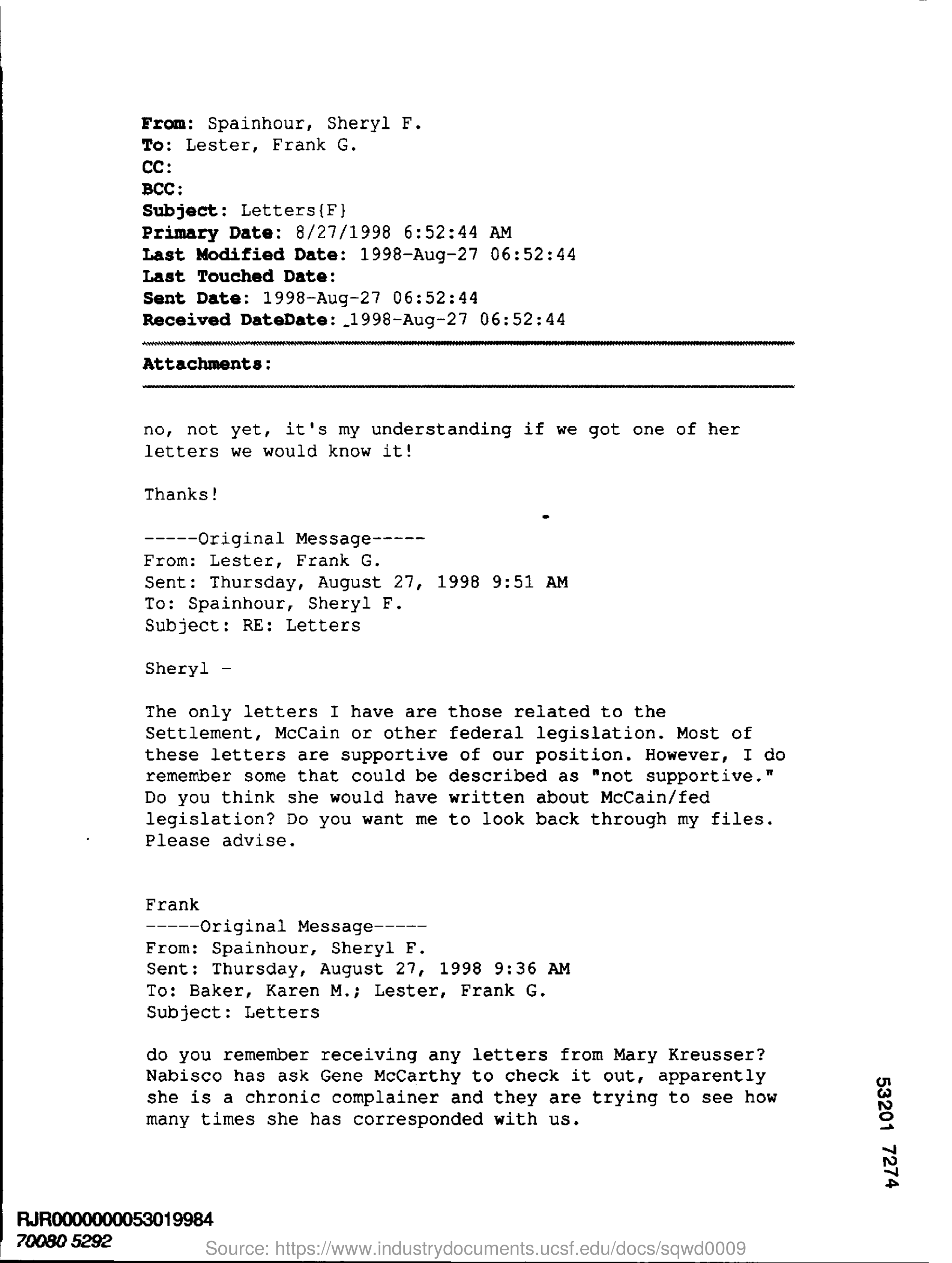

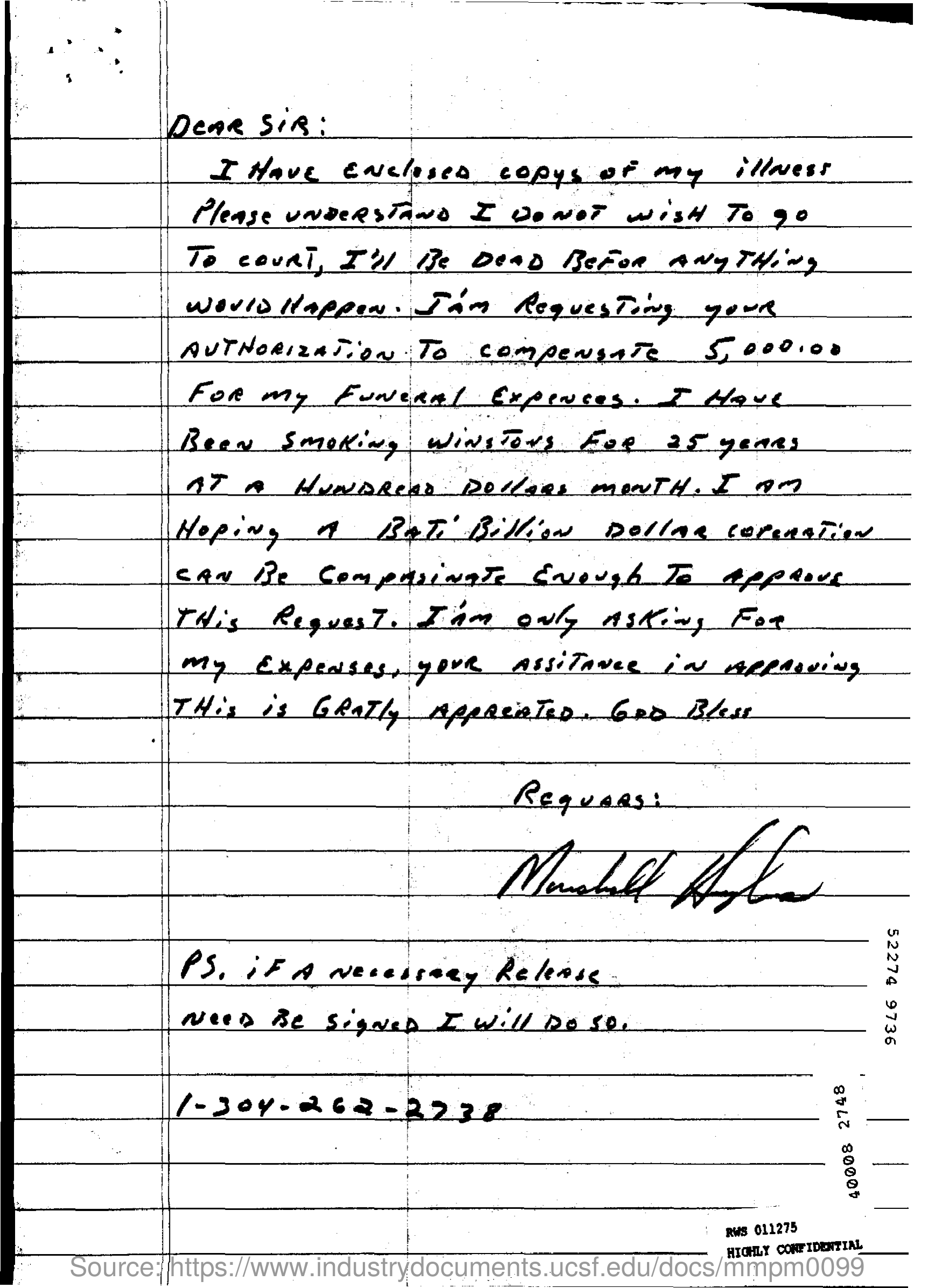

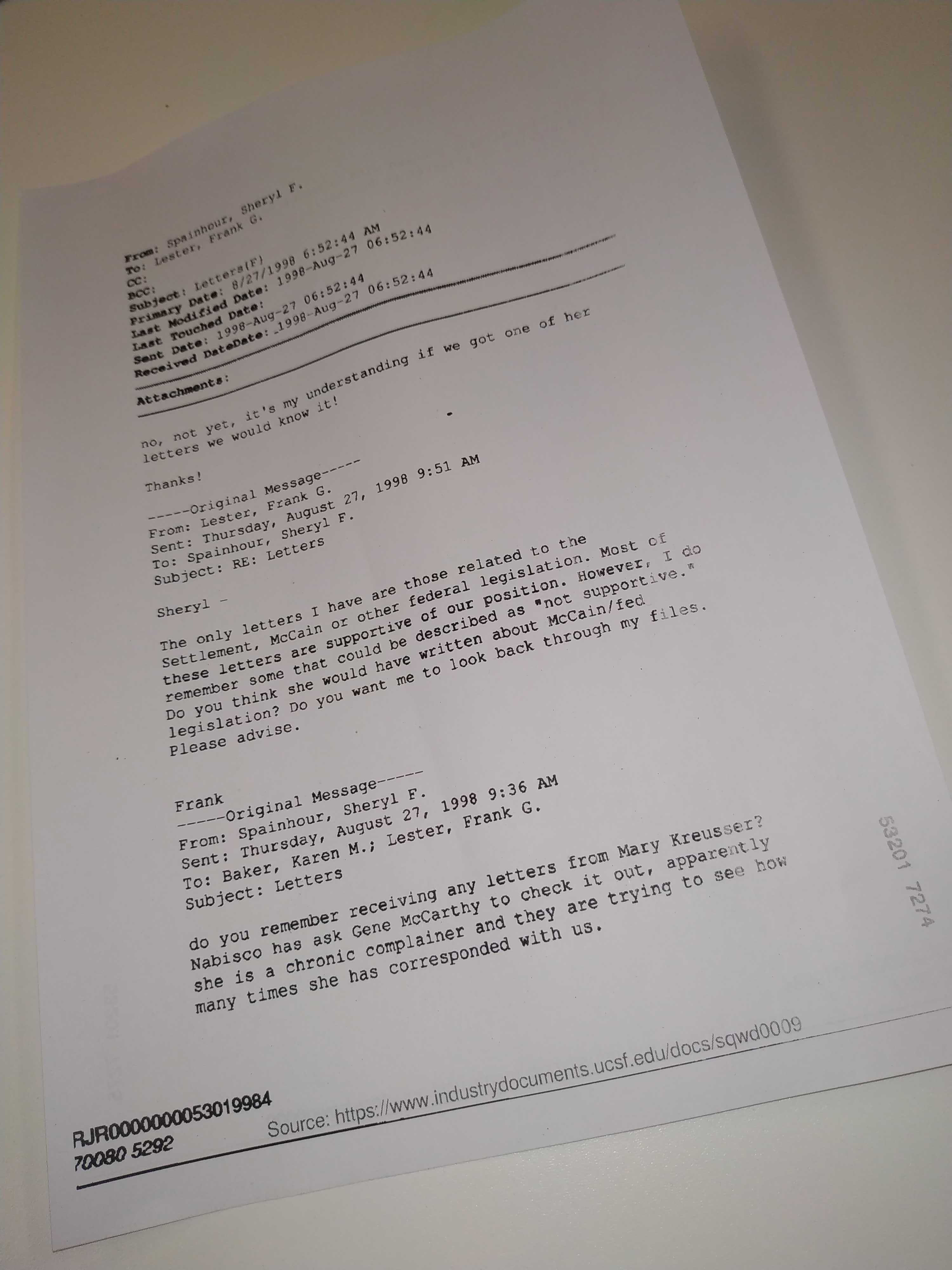

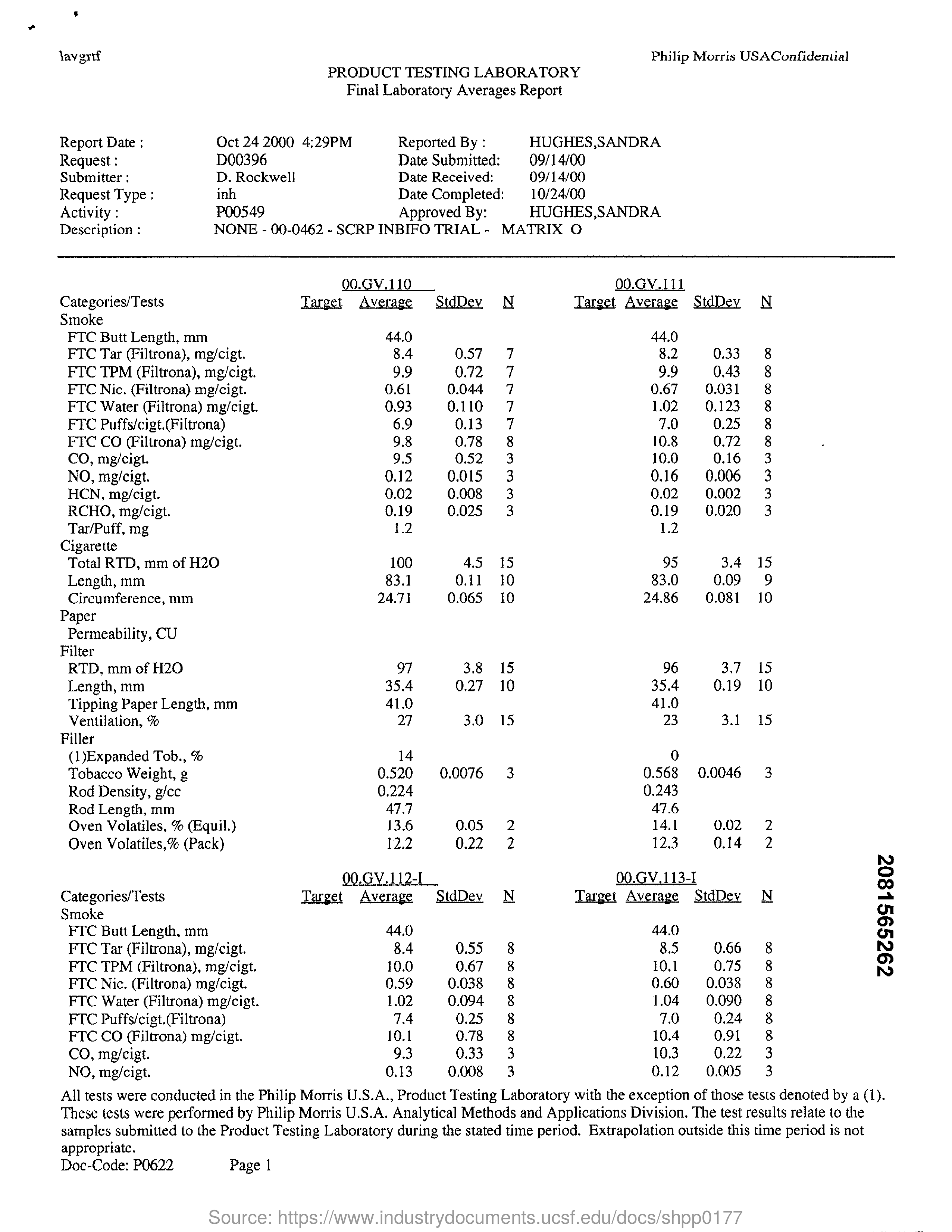

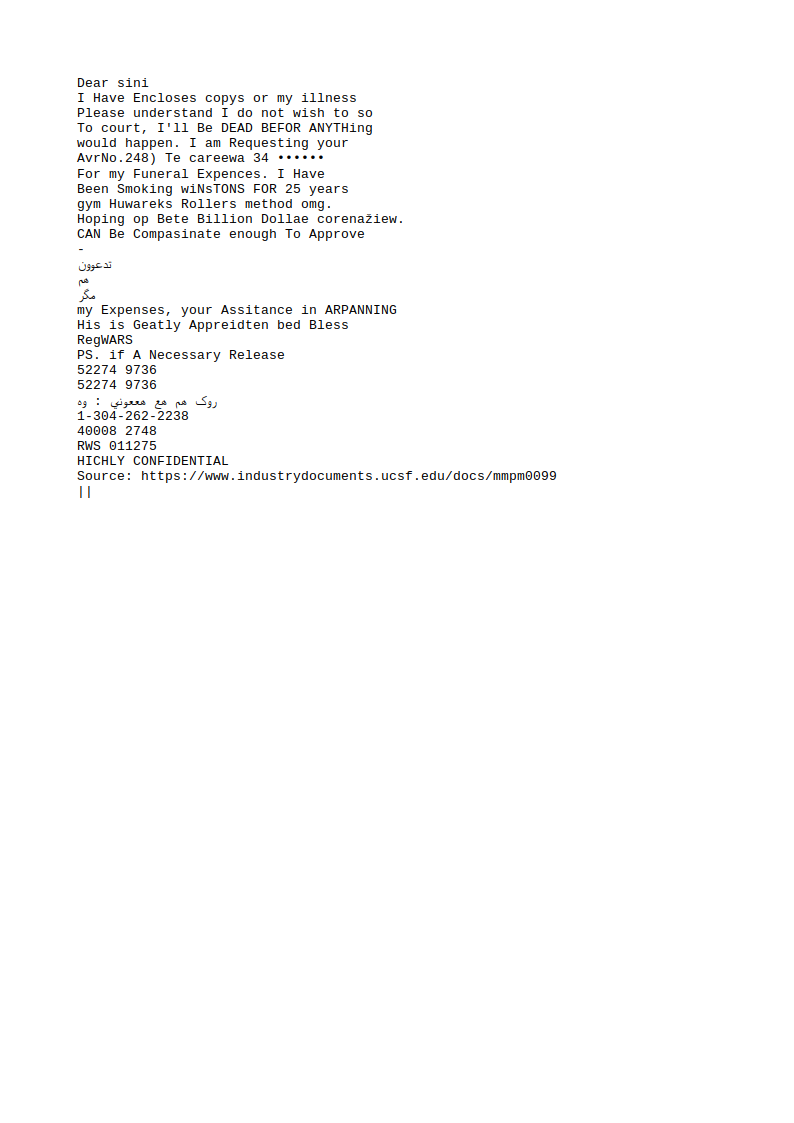

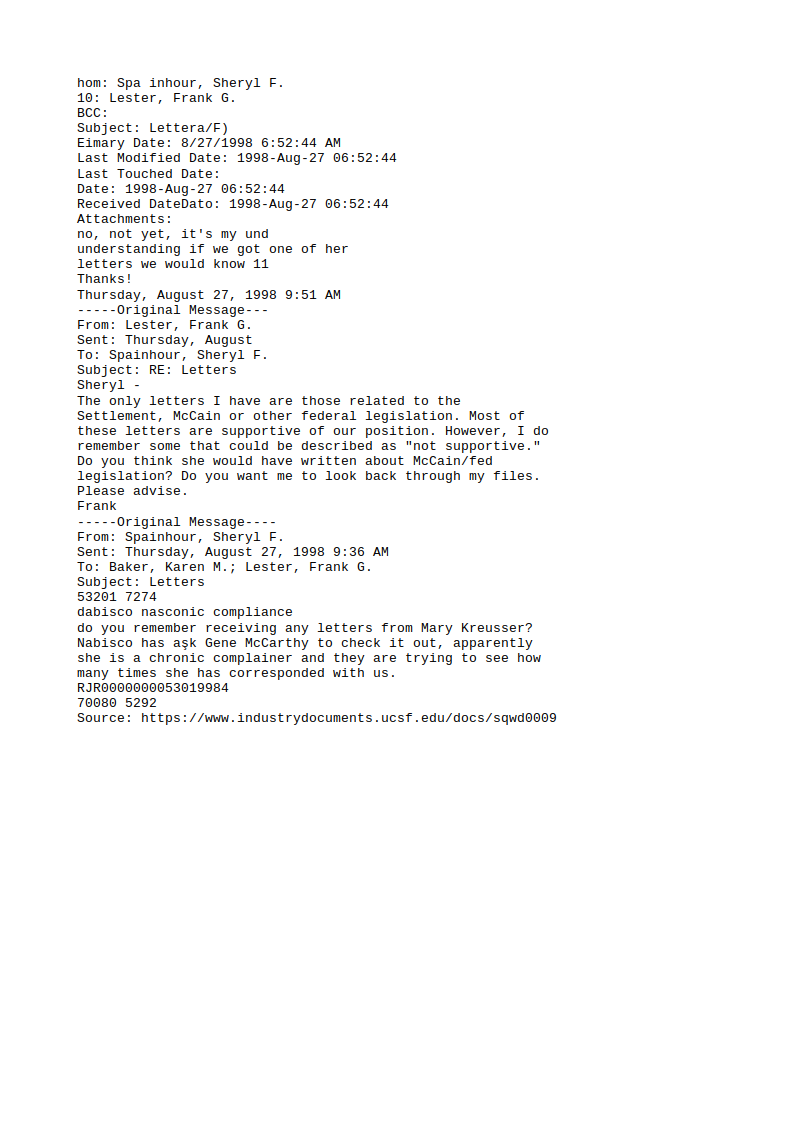

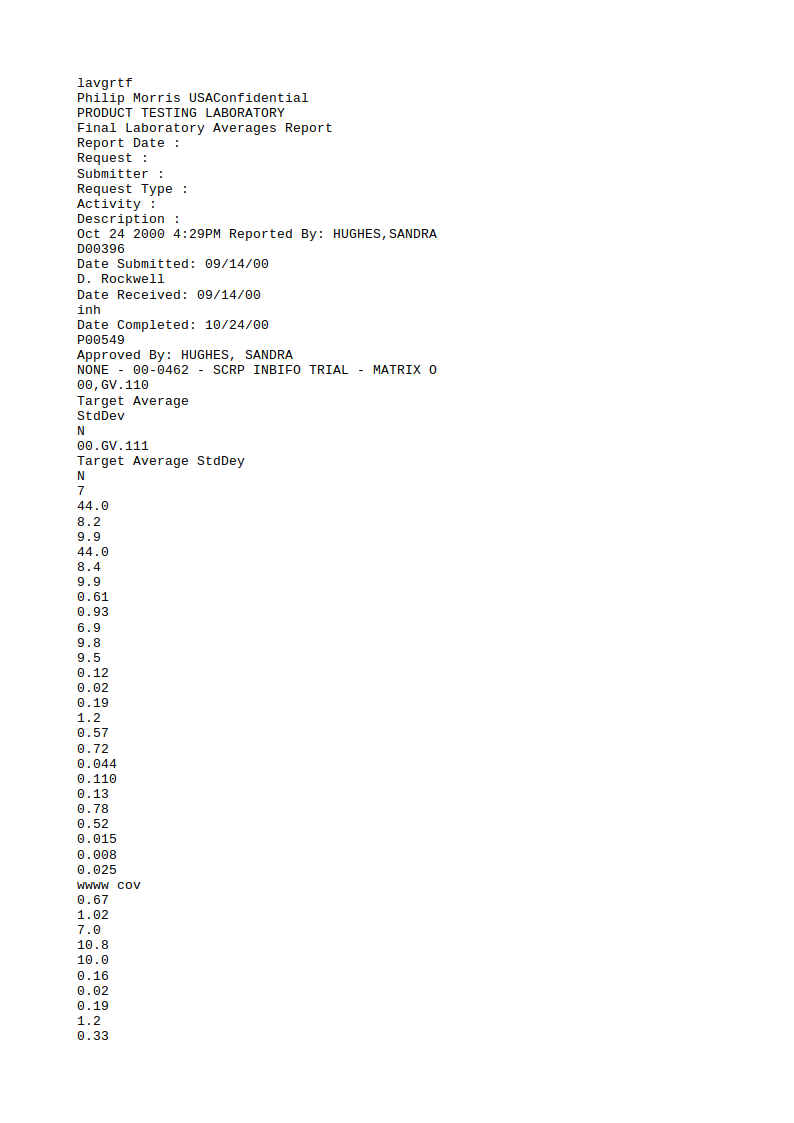

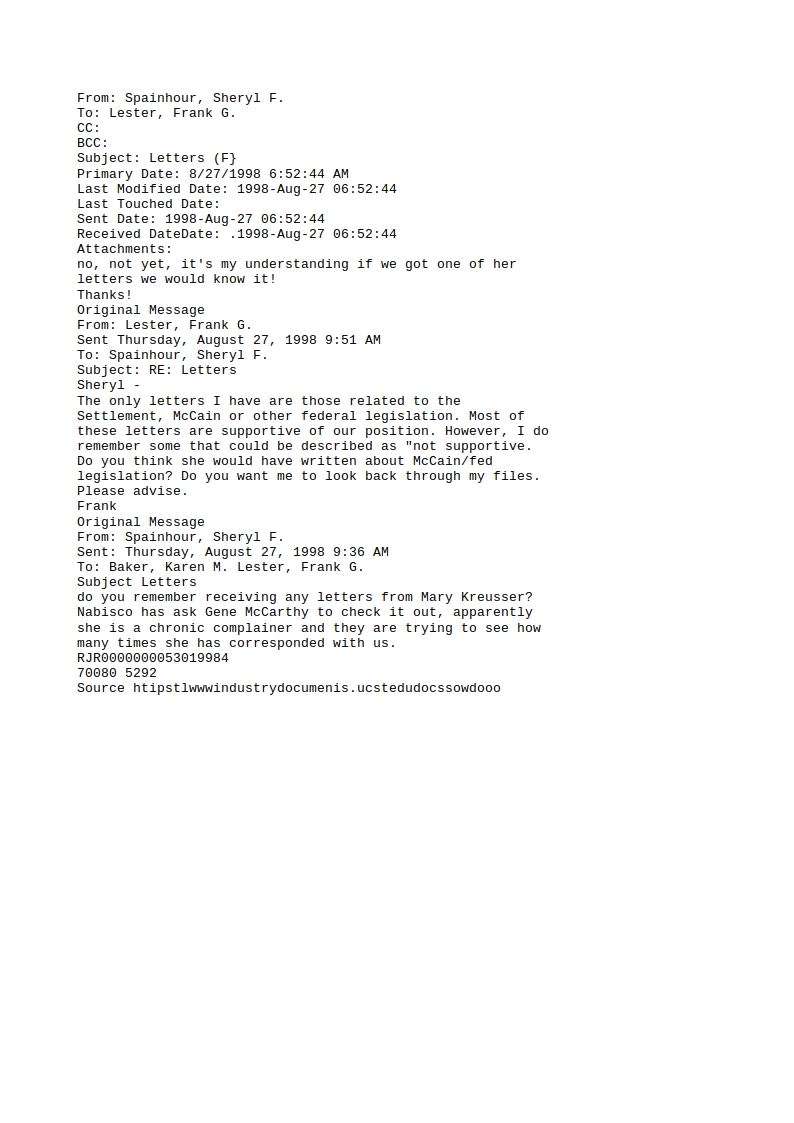

With these prerequisites in mind, we will test the OCR tools on the following four images:

All images come from a large corpus of tobacco industry documents. The third one was printed and then photographed with a smartphone, which introduces typical noise.

First we will examine how Tesseract OCR fares with respect to these tasks.

Tesseract OCR

The best thing about Tesseract is that it is free and easy to use. At its core it is a command-line tool, but there is also a Python wrapper called pytesseract and a GUI frontend called gImageReader, so you can choose whichever best fits your purposes.

Using the command-line tool is as easy as

    tesseract imagename outputbase [outputformat]

If we don't specify an output format, the default is a text file containing the recognized characters. Alternatively, pdf produces a searchable PDF, while hocr and alto produce files in the hOCR and ALTO formats (XML-based standards of the same names), which contain additional information such as character positions. See here for more optional arguments.
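The hOCR output is useful if you want to post-process Tesseract's results yourself. In an hOCR file, each recognized word is an element with the class `ocrx_word` whose `title` attribute contains `bbox x0 y0 x1 y1` (pixel coordinates, origin at the top left). Below is a minimal sketch using only Python's standard library that collects every word with its bounding box. The names `HocrWordParser` and `parse_hocr_words` are our own, and the sketch assumes a reasonably well-formed hOCR file.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1)


class HocrWordParser(HTMLParser):
    """Collect (text, bbox) for every element with class 'ocrx_word'."""

    def __init__(self) -> None:
        super().__init__()
        self.words: List[Tuple[str, Optional[Box]]] = []
        self._depth = 0          # > 0 while we are inside an ocrx_word element
        self._bbox: Optional[Box] = None
        self._chars: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._depth:
            self._depth += 1     # nested tag inside a word, e.g. <strong>
            return
        attrs = dict(attrs)
        if "ocrx_word" in (attrs.get("class") or "").split():
            self._bbox = None
            # title looks like "bbox 36 92 96 116; x_wconf 95"
            for part in (attrs.get("title") or "").split(";"):
                fields = part.split()
                if fields and fields[0] == "bbox":
                    self._bbox = tuple(int(v) for v in fields[1:5])
            self._depth = 1
            self._chars = []

    def handle_endtag(self, tag):
        if self._depth:
            self._depth -= 1
            if self._depth == 0:  # the ocrx_word element itself was closed
                self.words.append(("".join(self._chars).strip(), self._bbox))

    def handle_data(self, data):
        if self._depth:
            self._chars.append(data)


def parse_hocr_words(hocr_text: str) -> List[Tuple[str, Optional[Box]]]:
    parser = HocrWordParser()
    parser.feed(hocr_text)
    parser.close()
    return parser.words
```

For example, `tesseract scan.png out hocr` (file names hypothetical) should write `out.hocr`, and passing that file's contents to `parse_hocr_words` returns a list of `(text, (x0, y0, x1, y1))` tuples.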

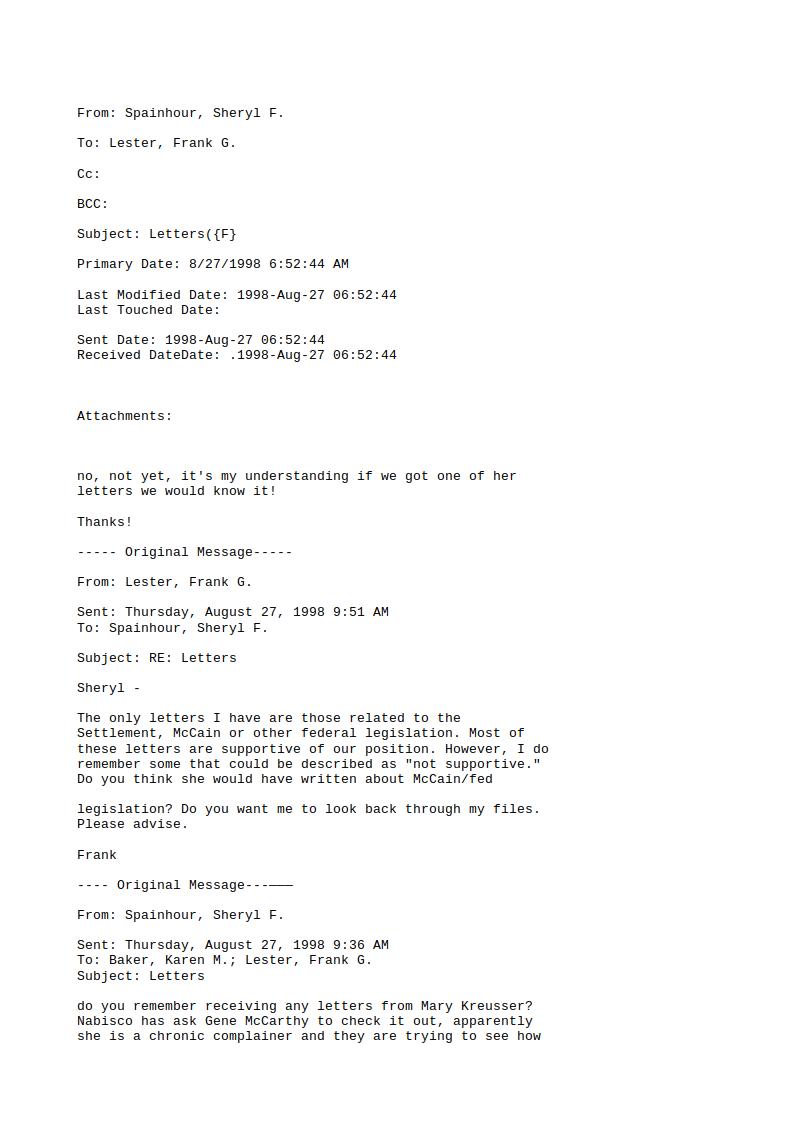

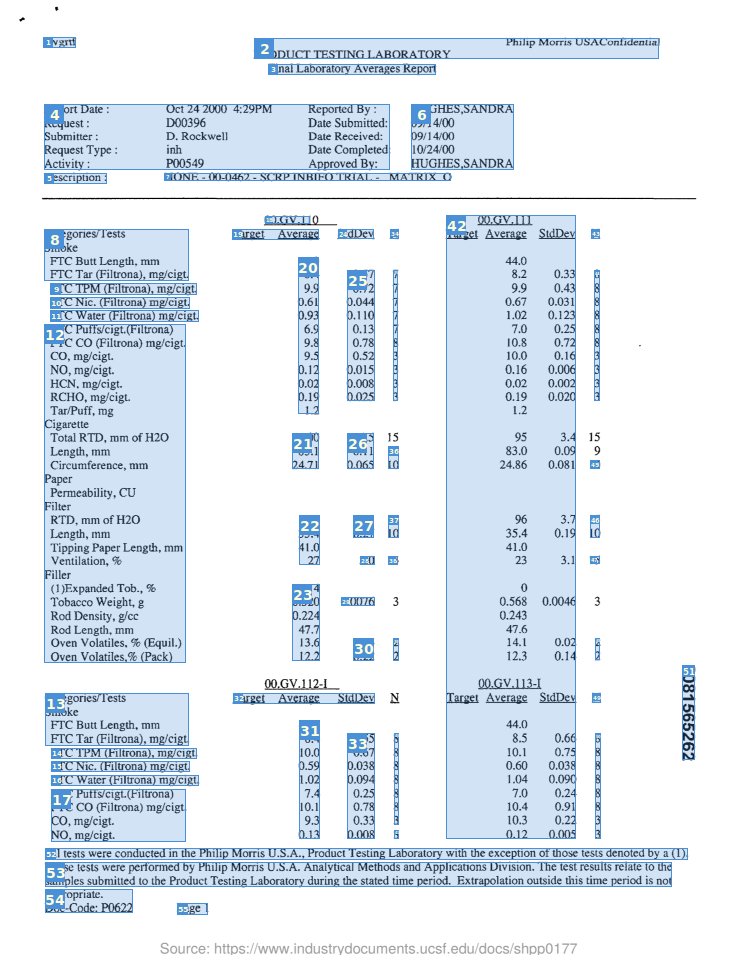

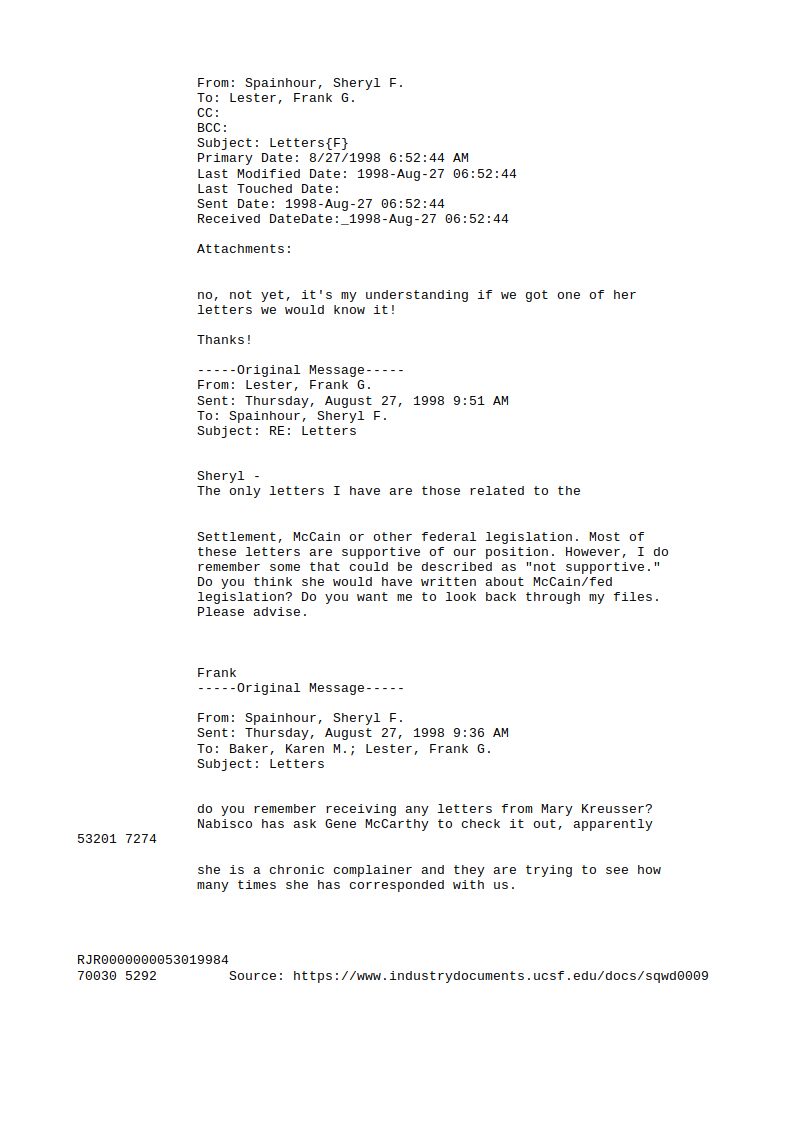

Here is what Tesseract finds in our test images:

As you'll notice, Tesseract OCR recognizes the text in the well-scanned email pretty well. However, on the handwritten letter and the smartphone-captured document, it outputs either nonsense or nothing at all.
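Verdicts such as "pretty well" are qualitative. If you have a hand-typed transcription of a test image, one common way to quantify them is the character error rate (CER): the Levenshtein edit distance between the OCR output and the transcription, divided by the length of the transcription. We did not compute it for this comparison; the following is a minimal pure-Python sketch (function names are our own) for repeating such a test on your own documents.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))          # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                # delete ca
                curr[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),   # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance normalized by the length of the reference transcription."""
    if not reference:
        return 0.0 if not hypothesis else 1.0
    return levenshtein(reference, hypothesis) / len(reference)
```

Lower is better, and the CER can exceed 1 when the OCR output contains a lot of extra garbage. You may want to normalize whitespace in both strings first, since OCR tools differ in how they emit line breaks.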

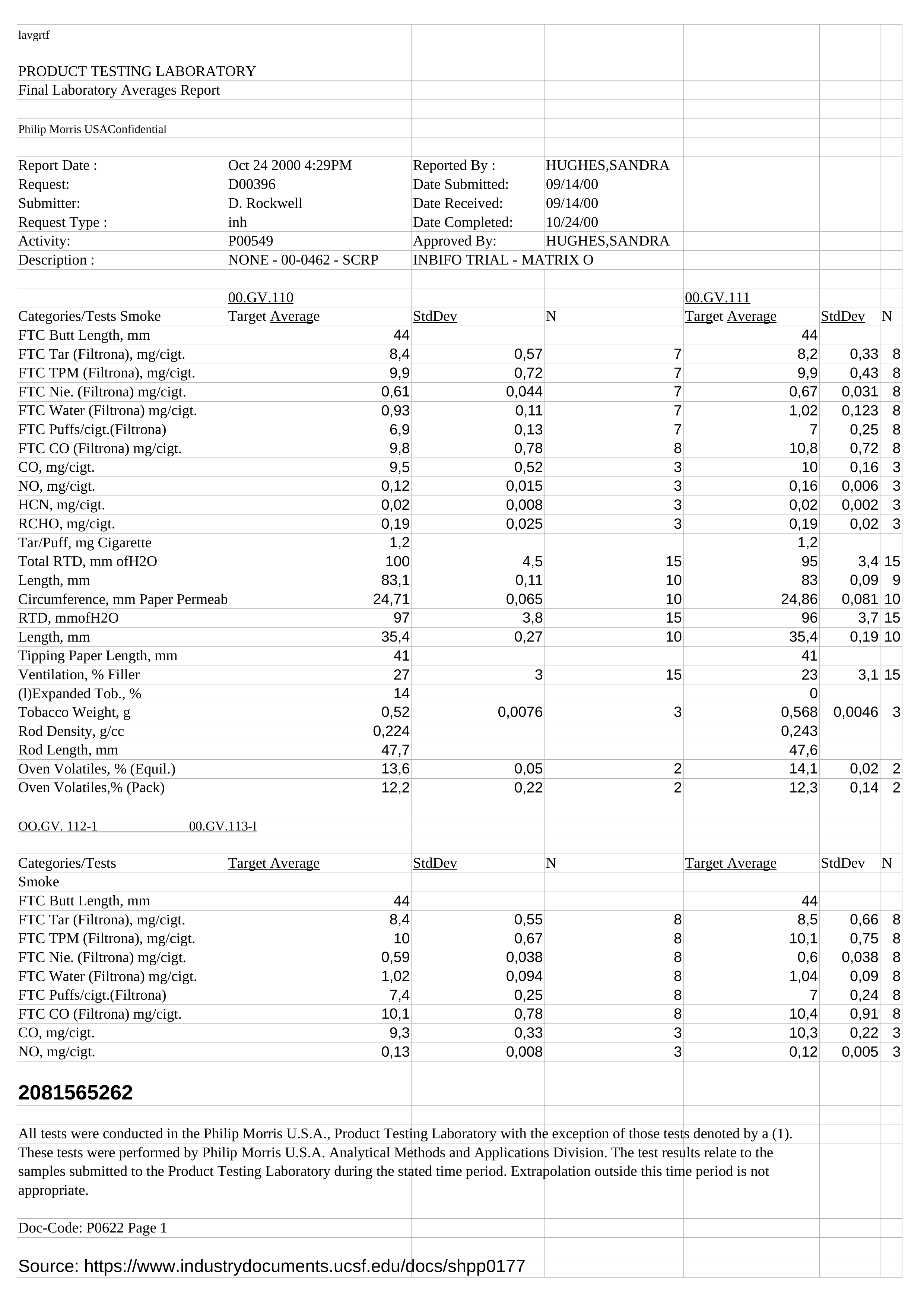

For the table image I used gImageReader, the GUI frontend mentioned above. It turns out that Tesseract outputs bounding boxes for the areas of the image that contain text, but this doesn't come close to proper table extraction. Of course, you can process Tesseract's output with your own table extraction tool. Our blog posts about applying OCR to technical drawings and extracting dates from letters give an idea of how.
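To give a rough idea of what such a homemade tool could start from, here is a deliberately naive heuristic sketch; it is not something Tesseract or gImageReader provides. It takes words with bounding boxes as `(text, (x0, y0, x1, y1))` tuples (for example, parsed from hOCR output), groups them into rows by their vertical center, and splits each row into cells wherever the horizontal gap between neighboring words exceeds a threshold. The function names and the thresholds `row_tol` and `col_gap` are hypothetical; the pixel values would have to be tuned to your scan resolution.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels, origin top left
Word = Tuple[str, Box]


def group_into_rows(words: List[Word], row_tol: float = 10.0) -> List[List[Word]]:
    """Cluster words whose vertical centers lie within row_tol of a row's first word."""
    rows = []
    for text, box in sorted(words, key=lambda w: (w[1][1] + w[1][3]) / 2):
        center = (box[1] + box[3]) / 2
        if rows and abs(center - rows[-1]["center"]) <= row_tol:
            rows[-1]["words"].append((text, box))
        else:
            rows.append({"center": center, "words": [(text, box)]})
    return [r["words"] for r in rows]


def split_into_cells(row: List[Word], col_gap: float = 30.0) -> List[str]:
    """Join words left to right; start a new cell when the gap exceeds col_gap."""
    cells, current, last_x1 = [], [], None
    for text, (x0, _, x1, _) in sorted(row, key=lambda w: w[1][0]):
        if last_x1 is not None and x0 - last_x1 > col_gap:
            cells.append(" ".join(current))
            current = []
        current.append(text)
        last_x1 = x1
    if current:
        cells.append(" ".join(current))
    return cells


def words_to_table(words: List[Word], row_tol: float = 10.0,
                   col_gap: float = 30.0) -> List[List[str]]:
    return [split_into_cells(r, col_gap) for r in group_into_rows(words, row_tol)]
```

The sketch does not align columns across rows, handle merged cells, or cope with skewed or curved pages; those are the hard parts of real table extraction.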

ABBYY FineReader

ABBYY offers a range of OCR-related products. I'm going to use the ABBYY Cloud OCR SDK API. This cloud service uses the ABBYY FineReader OCR engine, which can also be installed locally. Unlike Tesseract, ABBYY Cloud OCR is not free (pricing).

If you want to learn how to use the API, you'll find everything you need to know in these quick start guides.

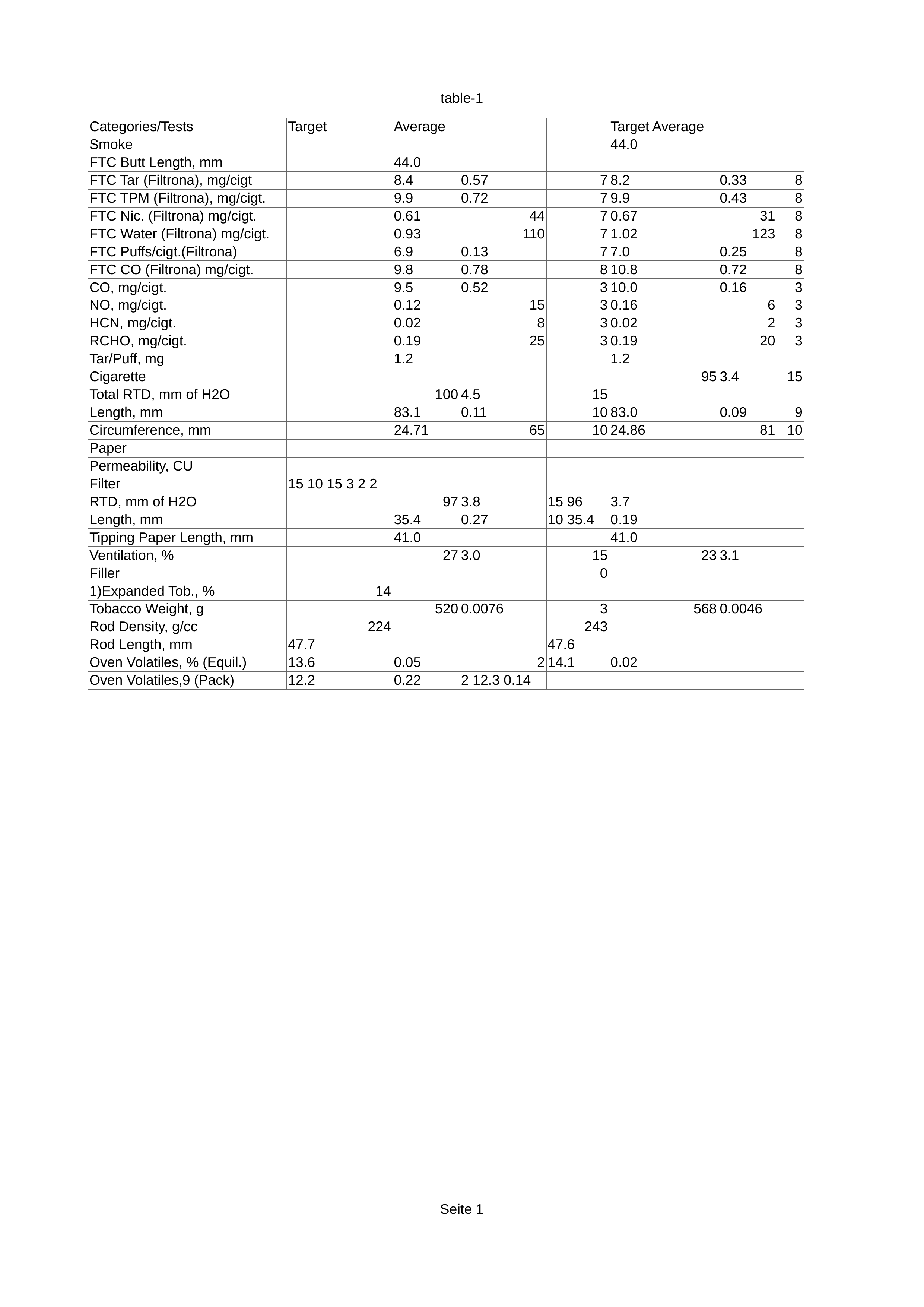

Again, we have different options for the OCR output format. In addition to the formats Tesseract provides, ABBYY can also output XLSX spreadsheets. I'm going to use that option for our table image.

ABBYY FineReader has no problems with the well-scanned email and does reasonably well on the smartphone-captured document. It fails completely on the handwritten document, though.

Its main virtue is its table extraction capability: as you can see in the last picture, the output preserves the tabular structure. A closer look at the XML output reveals that FineReader indeed recognizes the table sections and the individual cells, and even extracts details such as font style (see here for a description of ABBYY's XML schema).
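If you want the table structure in code rather than in a spreadsheet, you can read it from the XML export. The sketch below assumes element names from ABBYY's FineReader XML schema as we understand it: `block` elements with `blockType="Table"` containing `row`, `cell`, `line` and `formatting` elements, with one `charParams` element per character when character attributes are exported. Treat these names as assumptions to check against the schema description. Namespaces are stripped so that the code does not depend on a particular schema version; the function names are our own.

```python
import xml.etree.ElementTree as ET
from typing import List


def local(tag: str) -> str:
    """Drop the '{namespace}' prefix of an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def line_text(line: ET.Element) -> str:
    """Text of one <line>: from <charParams> if present, else from <formatting>."""
    chars = [el.text or "" for el in line.iter() if local(el.tag) == "charParams"]
    if chars:
        return "".join(chars)
    return "".join(el.text or "" for el in line.iter() if local(el.tag) == "formatting")


def abbyy_tables(xml_text) -> List[List[List[str]]]:
    """Return each blockType="Table" block as a list of rows of cell strings."""
    root = ET.fromstring(xml_text)  # accepts str or bytes
    tables = []
    for block in root.iter():
        if local(block.tag) != "block" or block.get("blockType") != "Table":
            continue
        rows = []
        for row in (el for el in block.iter() if local(el.tag) == "row"):
            cells = []
            for cell in (el for el in row if local(el.tag) == "cell"):
                texts = (line_text(ln).strip() for ln in cell.iter() if local(ln.tag) == "line")
                cells.append(" ".join(t for t in texts if t))  # one string per cell
            rows.append(cells)
        tables.append(rows)
    return tables
```

Font information such as the style attributes on `formatting` is ignored here; the sketch only recovers the cell grid and its text.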

Google Cloud Vision

Next in line is Google Cloud Vision, which we are going to use via its API. Just like FineReader, it is a paid service (pricing).

Using the Cloud Vision API is a bit trickier than using ABBYY's API or Tesseract. To learn how it works, you will find good starting points here and here.
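At its core, an OCR call to Cloud Vision is a POST request to the `https://vision.googleapis.com/v1/images:annotate` endpoint, with the image encoded as base64 and the `DOCUMENT_TEXT_DETECTION` feature, which is intended for dense text such as documents. The following sketch only builds the JSON request body with the standard library. Authentication (API key or OAuth token) depends on your Google Cloud setup and is left out; the function name and the optional `language_hints` parameter are our own choices.

```python
import base64
import json
from typing import Iterable, Optional


def build_annotate_request(image_bytes: bytes,
                           language_hints: Optional[Iterable[str]] = None) -> str:
    """JSON body for POST https://vision.googleapis.com/v1/images:annotate."""
    request = {
        "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
        # DOCUMENT_TEXT_DETECTION is tuned for dense text; TEXT_DETECTION for sparse text in photos
        "features": [{"type": "DOCUMENT_TEXT_DETECTION"}],
    }
    if language_hints:
        request["imageContext"] = {"languageHints": list(language_hints)}
    # the endpoint accepts a batch, so the single request is wrapped in a list
    return json.dumps({"requests": [request]})
```

You would send this body with any HTTP client; Google's official client libraries wrap the same call if you prefer not to handle the JSON yourself.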

We get the following output:

Google does well on the scanned email and recognizes the text in the smartphone-captured document about as well as ABBYY. Where it stands out is handwriting: it is much better than Tesseract or ABBYY at recognizing it, as the second result image shows. The result is still far from perfect, but at least it got some things right. On the other hand, Google Cloud Vision doesn't handle tables very well: it extracts the text, but that's about it.

In fact, the raw Cloud Vision output is a JSON file that contains information about character positions. Just as with Tesseract, one could try to detect tables based on this information, but again, this functionality is not built in.
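In that JSON, the recognized text is nested as `fullTextAnnotation`, then `pages`, `blocks`, `paragraphs`, `words` and `symbols`, and each word carries a `boundingBox` with four `vertices`. A minimal sketch that flattens this into `(text, (x0, y0, x1, y1))` tuples, the kind of input a homemade table detector would start from, could look like the following (the function name is our own). Note that the JSON omits coordinates whose value is 0, hence the defaults.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1)


def vision_words(response: Dict) -> List[Tuple[str, Box]]:
    """Flatten an images:annotate response into words with axis-aligned boxes."""
    words = []
    for result in response.get("responses", []):
        annotation = result.get("fullTextAnnotation", {})
        for page in annotation.get("pages", []):
            for block in page.get("blocks", []):
                for paragraph in block.get("paragraphs", []):
                    for word in paragraph.get("words", []):
                        text = "".join(s.get("text", "") for s in word.get("symbols", []))
                        vertices = word.get("boundingBox", {}).get("vertices", [])
                        if not vertices:
                            continue
                        # coordinates equal to 0 are missing from the JSON
                        xs = [v.get("x", 0) for v in vertices]
                        ys = [v.get("y", 0) for v in vertices]
                        words.append((text, (min(xs), min(ys), max(xs), max(ys))))
    return words
```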

Note that Google has also released a beta version of Document Understanding AI, which we haven't tested yet.

Amazon Textract

Our last candidate is also a paid cloud-based solution (pricing).

For testing purposes, you can conveniently use Textract via its drag-and-drop browser interface, but for production-ready applications you will probably want to use the provided API.

Using the browser interface, Textract outputs

the API response as a JSON file,

the raw text,

detected tables in separate CSV files,

key-value pairs (interpreting the input as a form), also as a CSV file.
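If you use the API instead of the browser interface (for example boto3's `analyze_document` with `FeatureTypes=["TABLES"]`), you get only the JSON and have to rebuild the tables yourself. In that response, every element is an entry in `Blocks`: a `TABLE` block links to its `CELL` blocks via `Relationships` of type `CHILD`, each cell has a 1-based `RowIndex` and `ColumnIndex`, and links in turn to its `WORD` blocks. A minimal sketch under these assumptions (function names our own) that turns each table into a grid and then into CSV:

```python
import csv
import io
from typing import Dict, Iterator, List


def textract_tables(response: Dict) -> List[List[List[str]]]:
    """Rebuild every TABLE block of an AnalyzeDocument response as a grid of strings."""
    blocks = {b["Id"]: b for b in response["Blocks"]}

    def child_ids(block: Dict) -> Iterator[str]:
        for rel in block.get("Relationships", []):
            if rel["Type"] == "CHILD":
                yield from rel["Ids"]

    tables = []
    for table in (b for b in response["Blocks"] if b["BlockType"] == "TABLE"):
        cells = [blocks[i] for i in child_ids(table) if blocks[i]["BlockType"] == "CELL"]
        n_rows = max((c["RowIndex"] for c in cells), default=0)
        n_cols = max((c["ColumnIndex"] for c in cells), default=0)
        grid = [[""] * n_cols for _ in range(n_rows)]
        for cell in cells:
            words = [blocks[i]["Text"] for i in child_ids(cell)
                     if blocks[i]["BlockType"] == "WORD"]
            # RowIndex and ColumnIndex are 1-based
            grid[cell["RowIndex"] - 1][cell["ColumnIndex"] - 1] = " ".join(words)
        tables.append(grid)
    return tables


def table_to_csv(grid: List[List[str]]) -> str:
    """Serialize one grid with the csv module's default dialect."""
    buffer = io.StringIO()
    csv.writer(buffer).writerows(grid)
    return buffer.getvalue()
```

Cells spanning several rows or columns are not treated specially here; each cell's text simply lands at its own row and column index.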

These give us the following results:

As before, the email looks good, but apparently Textract doesn't handle handwritten text very well. Furthermore, although the smartphone-captured document looks OK at first sight, a closer inspection reveals that Amazon's OCR mixed up the lines (due to the curvature of the document in the image).

For the tabular document, we only show one of the three tables Textract identified. Even so, it is already visible that some column headers are missing and some numbers are in the wrong places.

Conclusion

This table sums up the results of our tests:

| OCR tool | Ideal document image | Handwriting | Smartphone-captured | Table extraction |
|---|---|---|---|---|
| Tesseract OCR | Acceptable | Bad | Bad | Bad |
| ABBYY FineReader | Good | Bad | Good | Good |
| Google Cloud Vision | Good | Acceptable | Good | Bad |
| Amazon Textract | Good | Bad | Acceptable | Acceptable |

The main takeaways in words:

If you deal with machine-printed, well-scanned documents, or perhaps PDF files without embedded text, then Tesseract OCR might do the job, although the commercial services are more reliable.

If recognition of handwritten characters is important for you, Google Cloud Vision is your only viable option among the tested ones as of today.

If the document image quality is bad, both ABBYY FineReader and Google Cloud Vision still do a good job.

If your aim is to extract tabular information, you might want to choose ABBYY FineReader.

If you are interested in reading about a project where we used OCR, you can do that here: Automatic Verification of Service Charge Settlements.