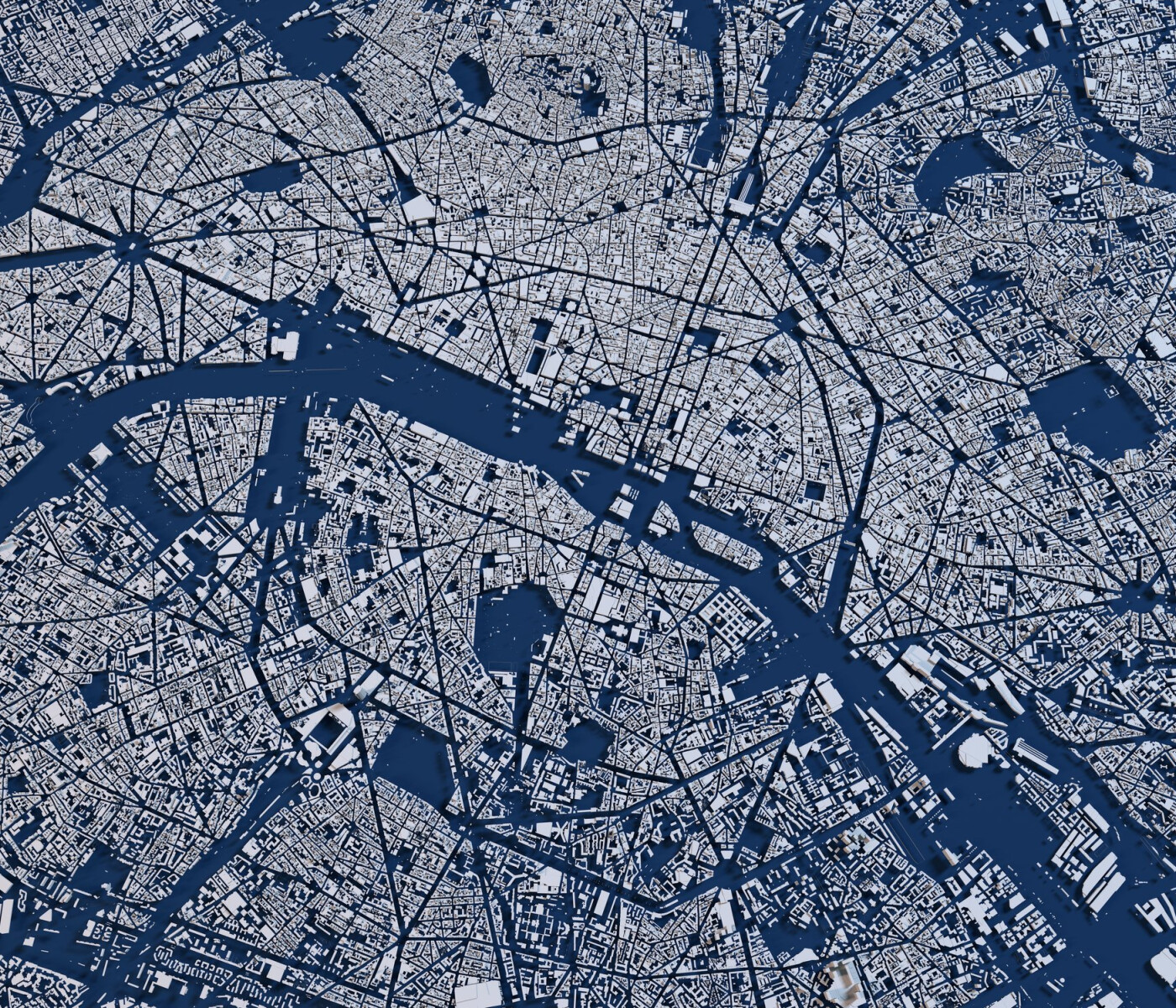

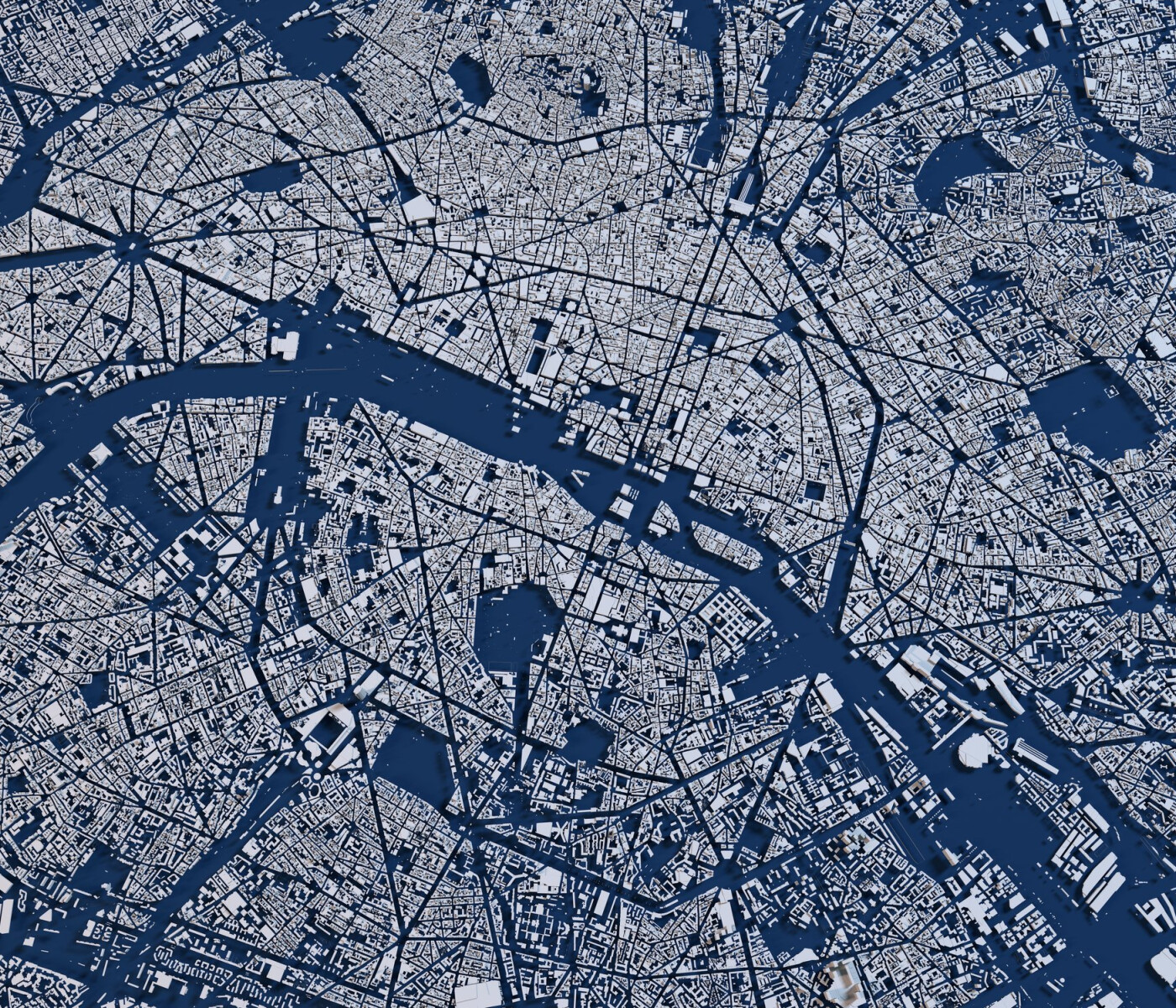

Remote sensing (the analysis of satellite or aerial images) is a great application of deep learning because of the huge amount of data available. For example, ESA’s archive of Sentinel imagery contains roughly 10 PB, approximately 7,000 times the size of ImageNet!

Unfortunately, there is a catch: labels for remote sensing images are often extremely difficult to produce. In our Convective Clouds detection project, for example, labelling many images required expert advice and external radar data.

One way to take advantage of the enormous amount of unlabelled data is to pretrain on a task for which we can easily generate labels. The hope is that the network learns features during pretraining that are also useful for the main task we care about. Reusing what a model has learned on one task for a different task is known as transfer learning.
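As an illustration of a task with "free" labels, here is a minimal sketch of one commonly used pretext task, rotation prediction. This is an assumption chosen for illustration, not necessarily the pretraining task used in our project. Each unlabelled square tile is rotated by a random multiple of 90 degrees, and the number of rotations becomes its label. The function name `make_rotation_pretext_batch` and the `(N, H, W, C)` array layout are hypothetical choices for this sketch.

```python
import numpy as np

def make_rotation_pretext_batch(images, rng=None):
    """Build a self-labelled batch from unlabelled image tiles (hypothetical helper).

    images: array of shape (N, H, W, C); tiles must be square (H == W)
            so that rotated tiles keep the same shape.
    Returns (rotated, labels), where labels[i] in {0, 1, 2, 3} is the
    number of 90-degree rotations applied to images[i].
    """
    if images.shape[1] != images.shape[2]:
        raise ValueError("rotation pretext task assumes square tiles (H == W)")
    rng = np.random.default_rng() if rng is None else rng
    n = images.shape[0]
    # The labels are generated from the data itself; no human annotation is needed.
    labels = rng.integers(0, 4, size=n)
    # Rotate each tile in the spatial plane (axes 0 and 1), leaving channels untouched.
    rotated = np.stack(
        [np.rot90(img, k=int(k), axes=(0, 1)) for img, k in zip(images, labels)]
    )
    return rotated, labels

# Usage: random arrays stand in for unlabelled multispectral tiles.
tiles = np.random.default_rng(0).random((8, 64, 64, 3))
x, y = make_rotation_pretext_batch(tiles, rng=np.random.default_rng(1))
print(x.shape, y.shape)  # (8, 64, 64, 3) (8,)
```

A network would first be trained to predict `y` from `x`; its learned weights would then serve as the starting point for fine-tuning on the smaller labelled dataset for the main task.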