CLIP: Mining the treasure trove of unlabeled image data

Fabian Gringel

Digitization, and the internet in particular, has given us a seemingly inexhaustible source not only of textual data but also of images. In the case of text, this treasure has already been unearthed through task-agnostic pretraining of language models such as BERT or GPT-3. Contrastive Language-Image Pretraining (CLIP for short) now does something similar with images, or rather with the combination of images and texts.

In this blog article I will give a rough non-technical outline of how CLIP works, and I will also show how you can try CLIP out yourself! If you are more technically minded and care about the details, then I recommend reading the original publication, which I think is well written and comprehensible.

Background

Notwithstanding the tremendous progress achieved in the area of machine and deep learning in the last decade, one point of criticism persists: even the top performing state-of-the-art models still learn terribly inefficiently.

Consider computer vision, in particular image classification: where a human needs one example image or maybe a handful to learn a visual concept and recognize it in new instances, ML models often need thousands to achieve human-like performance.

The problem is not a lack of data to feed the ML models with. It is that for most training tasks we must label the data first, and this is usually very costly.

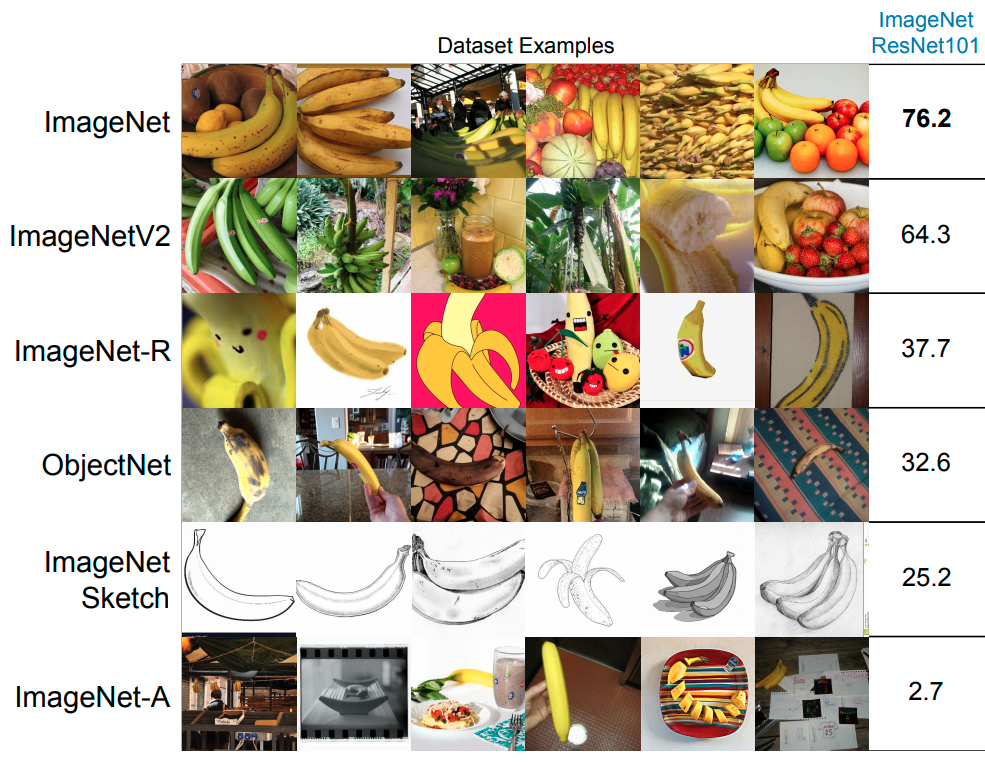

But suppose a crowd of manual labelers makes the effort to produce a huge annotated dataset like ImageNet (14 million labeled images), and we train our best models on it and achieve or even surpass human-level accuracy. Even then, it has been shown, the models still perform very poorly on datasets drawn from slightly different distributions (for example, image classification models trained on photos often don’t recognize sketches of objects), and they can easily be fooled by adversarial examples.

In a nutshell: even the largest manually labeled datasets we have are too small for models trained on them to generalize well. There are two possible solutions to this problem: either develop better models, or provide much bigger and more diverse datasets. It is the latter that CLIP takes a shot at.

Masked Language Modeling

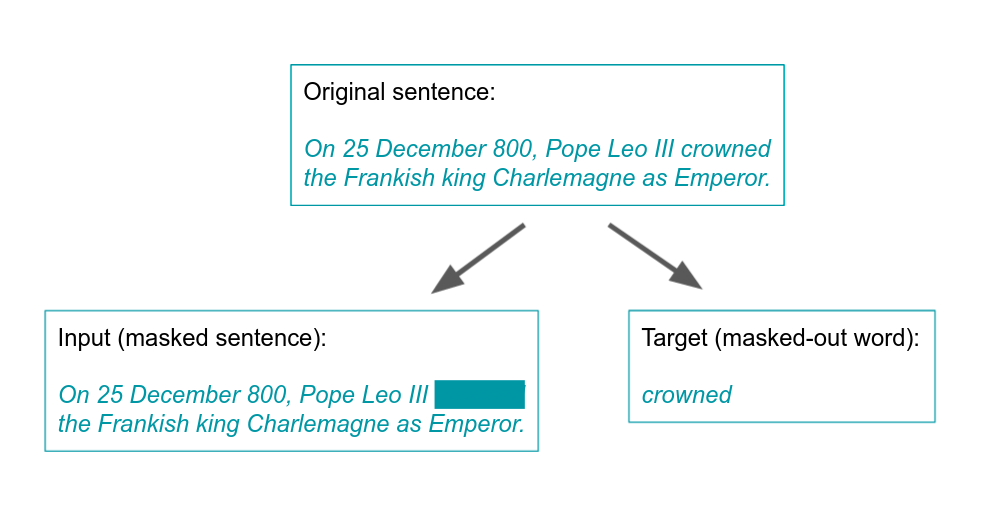

In natural language processing, it is already common to take advantage of the masses of (unlabeled!) textual data available in digitized form (e.g. Wikipedia articles, digitized books).

The trick is to generate labels automatically. One way to do this is masked language modeling. It comes in different variations, but a simple version would be to cut the text into convenient smaller chunks, e.g. sentences, and mask out one word per sentence. The task is then to guess the masked-out word:

This procedure allows us to define a supervised task that NLP models like BERT can be trained on, even though there are no manual labels.

All of this wouldn’t be very interesting if the models trained in this way were only good for predicting masked words. But it turns out that along the way they often learn meaningful word embeddings, i.e. embeddings that encode statistical semantic relations, which are incredibly useful for all kinds of NLP tasks.
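To make the label-generation trick concrete, here is a minimal sketch of the simple variant described above: split the text into sentences and mask one word per sentence. The function names, the `[MASK]` placeholder string and the naive split on periods are my own choices for illustration; real pipelines such as BERT's mask subword tokens with a more elaborate scheme.

```python
import random

MASK = "[MASK]"  # placeholder string chosen for this sketch

def make_masked_example(sentence, rng):
    """Mask one randomly chosen word; return (masked_sentence, target_word)."""
    words = sentence.split()
    idx = rng.randrange(len(words))
    target = words[idx]
    masked = words[:idx] + [MASK] + words[idx + 1:]
    return " ".join(masked), target

def make_dataset(text, seed=0):
    """Cut the text into sentences (naively, at periods) and mask one word in each."""
    rng = random.Random(seed)
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return [make_masked_example(s, rng) for s in sentences]

examples = make_dataset("The cat sat on the mat. Dogs like to chase cats.")
for masked, target in examples:
    # The masked sentence is the input, the hidden word is the automatically generated label.
    print(masked, "->", target)
```

Every pair in `examples` is a training example that required no human annotation: the label is simply the word that was hidden.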

CLIP - the basic idea

The basic idea behind CLIP is quite simple. Just as language models can be trained without manual supervision on the huge amounts of text available on the internet, CLIP is trained without manual supervision on the images found there.

This works as follows:

Scrape the web to obtain a dataset of images, each with a short text, preferably a caption, describing the content of the image (for example just use the titles or alt texts).

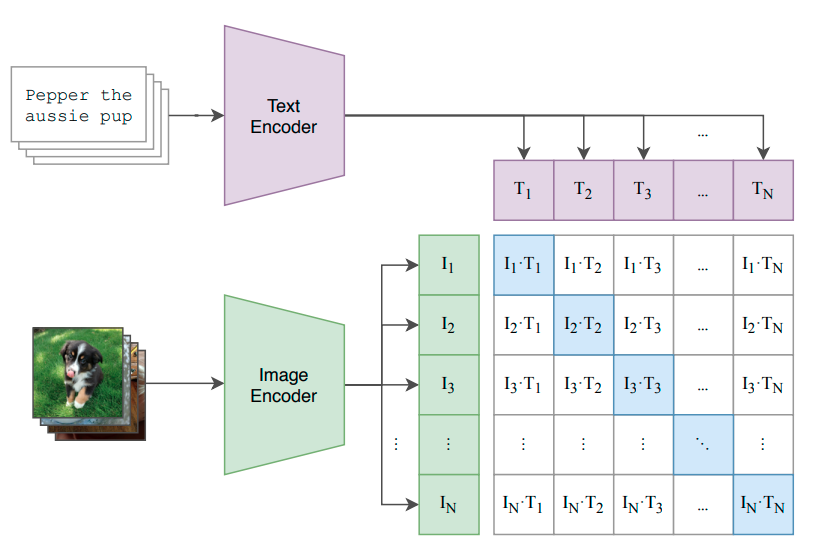

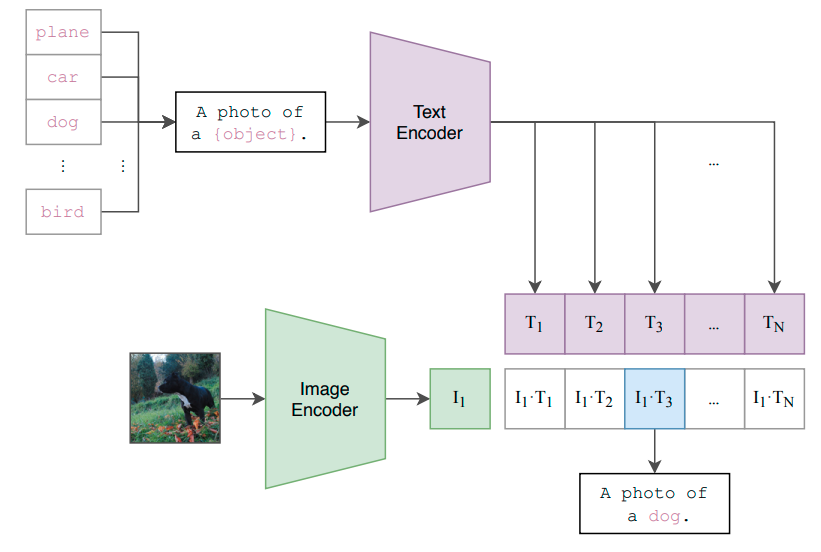

Set up a model that consists of a text encoder (e.g. a Transformer) and an image encoder (e.g. a ResNet or a Vision Transformer), both of which embed their inputs into a shared latent space, and that outputs the similarity of the two embeddings (e.g. the cosine similarity).

Generate input-target pairs by defining the input as an image together with a randomly chosen text, and set the target to 1 if this is the text the image was originally accompanied by, and to 0 if it is not.

Train the model to output a high similarity score (≈1) for image-text pairs that originally belong together, and low scores (≈0) for those that do not.
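The last three steps can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the two-dimensional embeddings are made-up stand-ins for encoder outputs, and the fixed `temperature` value is an illustrative choice. Instead of regressing each pair onto 0 or 1 separately, the sketch uses the batch-wise formulation from the CLIP paper, in which every image in a batch is compared with every text, and a symmetric cross-entropy loss rewards high similarity on the matching pairs (the diagonal of the similarity matrix).

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def similarity_matrix(image_embs, text_embs):
    """Entry [i][j] is the similarity of image i and text j."""
    return [[cosine_similarity(i, t) for t in text_embs] for i in image_embs]

def cross_entropy_rows(logits):
    """Mean cross-entropy where the correct column for row k is column k."""
    total = 0.0
    for k, row in enumerate(logits):
        m = max(row)  # subtract the maximum for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[k]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: image-to-text and text-to-image, averaged."""
    sims = similarity_matrix(image_embs, text_embs)
    logits = [[s / temperature for s in row] for row in sims]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(transposed))

# Made-up embeddings: text k was "scraped together with" image k.
image_embs = [[1.0, 0.1], [0.1, 1.0], [-1.0, 0.2]]
matched_texts = [[0.9, 0.0], [0.0, 0.8], [-0.7, 0.1]]
# The same texts, but assigned to the wrong images.
shuffled_texts = [matched_texts[1], matched_texts[2], matched_texts[0]]

loss_matched = contrastive_loss(image_embs, matched_texts)
loss_shuffled = contrastive_loss(image_embs, shuffled_texts)
print(loss_matched, loss_shuffled)
```

The loss is low when each image is most similar to its own caption and high when the captions are mixed up. Training adjusts both encoders to push the loss down.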

In other words, CLIP assumes that we do have useful information about images scraped from the web that we can leverage to define a supervised pretraining task. Image captions are similar to class labels in image classification tasks, with an obvious caveat: for the latter we have a fixed, usually relatively small number of discrete classes. By contrast, image captions rather resemble a continuous space of classes. To make sense of this space and make its elements comparable to images, CLIP relies on a text encoder. The latent space makes images commensurable with texts, i.e. in the latent space we can compare how closely the content of an image resembles what some text describes.

This difference between captions and class labels is also the reason why CLIP does not define the training objective as generating the caption from the image (which would be the closest thing you could have to predicting the class), but uses the “contrastive” training objective described above, i.e. predicting whether a given caption belongs to a given image or not. It would be too difficult to pinpoint the exact caption in continuous caption space.

CLIP yields more than just a useful pretraining task, though, as we will see in the next section.

Zero-shot learning

Zero-shot learning refers to the ability of a pretrained model to perform tasks it was not trained on without seeing any examples for the new tasks beforehand (seeing zero examples, hence the name).

The CLIP model is in fact a zero-shot image classifier that can be adjusted by an “instruction”. If we input the string “A dog with pointy ears.” into the text encoder, the model will start looking for images showing dogs with pointy ears. And if you would rather classify images of fat red cats, just tell the model so.

In fact, it is easy to try this out in practice. Thanks to OpenAI and Hugging Face you just need the following lines of code:

```python
from PIL import Image
import requests
from transformers import CLIPProcessor, CLIPModel

# Load the pretrained CLIP model and its matching preprocessor.
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

urls = [
    "https://upload.wikimedia.org/wikipedia/commons/4/4c/Blackcat-Lilith.jpg",
    "https://cdn.pixabay.com/photo/2016/11/08/18/10/dog-1809044_960_720.jpg",
    "https://upload.wikimedia.org/wikipedia/commons/7/73/Lion_waiting_in_Namibia.jpg",
    "https://live.staticflickr.com/65535/51018988161_f0ce02d495_b.jpg",
    "https://upload.wikimedia.org/wikipedia/commons/2/29/Big_Fat_Red_Cat.jpg",
]

# Download the images.
images = [Image.open(requests.get(url, stream=True).raw) for url in urls]

# Tokenize both prompts and preprocess all images into one batch.
inputs = processor(
    text=["A photo of a fat red cat.", "Not a photo of a fat red cat."],
    images=images,
    return_tensors="pt",
    padding=True,
)

outputs = model(**inputs)

# One row per image, one column per prompt.
logits_per_image = outputs.logits_per_image
# Turn each row of similarity scores into probabilities.
probs = logits_per_image.softmax(dim=1)
```

The URLs point to images I chose because I thought they would be difficult to tell apart from a real "photo of a fat red cat". The task for CLIP is then to judge whether each image is described better by "A photo of a fat red cat." or by "Not a photo of a fat red cat." (this specific negation might not be the best way of defining the "something else" class, so feel free to try out other prompts). CLIP outputs two similarity scores for each image, one per class, which we can easily interpret as probabilities by taking the softmax.
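In case the softmax step is unfamiliar, here is a minimal sketch of what it does to the two scores of a single image. The logit values are invented for illustration and are not actual CLIP outputs.

```python
import math

def softmax(logits):
    """Map arbitrary scores to positive numbers that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores of one image for the two prompts:
# ["A photo of a fat red cat.", "Not a photo of a fat red cat."]
example_logits = [24.5, 22.0]
example_probs = softmax(example_logits)
print(example_probs)
```

The prompt with the higher score gets the higher probability, and the gap between the probabilities grows with the difference between the scores.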

These are the results:

It turns out we were able to fool CLIP with the dog, but with none of the other images - not even the slim red cat.

CLIP was not trained on this specific task, and it has probably never encountered the caption “A photo of a fat red cat.” in its training set. Still, it knows what images to look for, because via the text encoder it has an understanding of the words appearing in the captions and of how they relate to each other.

More systematically, we can turn a pre-trained CLIP model into an image classifier by turning all classes into captions, e.g. via “A photo of a [class name]”. For a given photo, we compute the cosine similarities of the image’s encoding with all caption encodings and pick the highest-scoring caption (=class) as the classifier’s prediction.

If it’s not photos that we are classifying but sketches or even various types of images, we can adapt the captions, of course.
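The recipe can be sketched as follows. The helper names are hypothetical, and the three-dimensional vectors are invented stand-ins for what CLIP's image and text encoders would produce. In practice you would obtain the embeddings from a pretrained model.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def build_prompts(class_names, template="A photo of a {}."):
    """Turn class names into captions; swap the template for sketches etc."""
    return [template.format(name) for name in class_names]

def zero_shot_classify(image_emb, caption_embs, class_names):
    """Pick the class whose caption embedding is most similar to the image."""
    scores = [cosine_similarity(image_emb, c) for c in caption_embs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return class_names[best], scores

class_names = ["cat", "dog", "lion"]
prompts = build_prompts(class_names)

# Invented stand-ins for the text encoder's output, one vector per prompt.
caption_embs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.6, 0.0, 0.8]]
# Invented stand-in for the image encoder's output for one photo.
image_emb = [0.8, 0.2, 0.1]

predicted, scores = zero_shot_classify(image_emb, caption_embs, class_names)
print(prompts)
print(predicted, scores)
```

Changing the set of classes or the kind of image only means changing `class_names` or `template`, e.g. `build_prompts(class_names, template="A sketch of a {}.")`. No retraining is involved.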

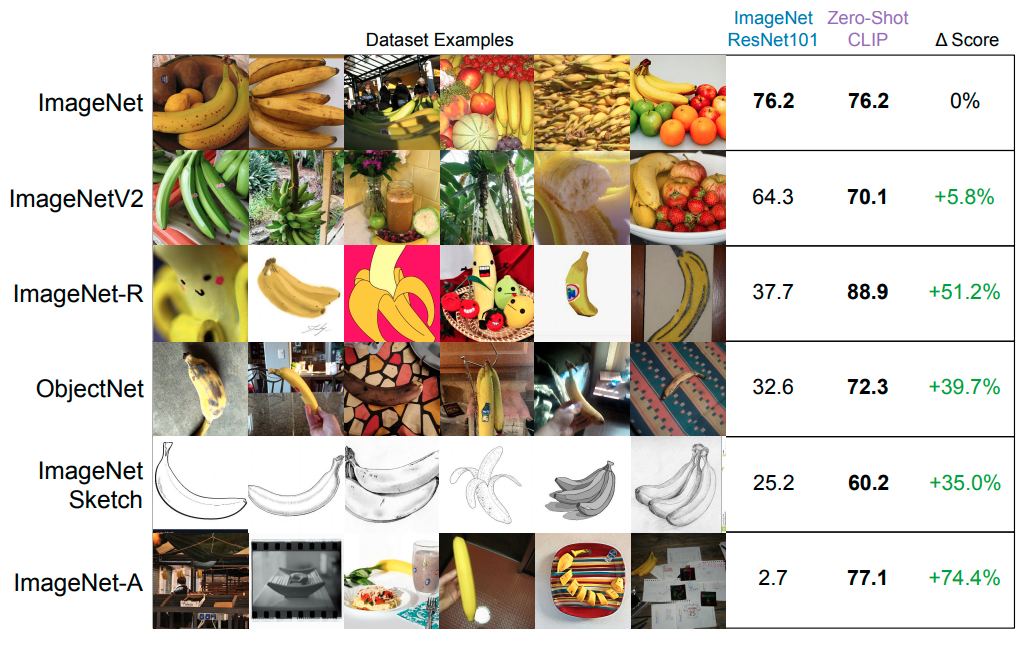

It turns out that even on specific “narrow” datasets like ImageNet, generic CLIP-based classifiers perform about as well as classifiers trained specifically on these datasets. More importantly, CLIP-based classifiers seem to be much more robust to distribution shifts and adversarial examples.

The reason for CLIP’s robustness seems to be that it has already seen more diverse examples during training. In fact, CLIP too generalizes poorly to types of images that were not covered in its training set.

There are more examples of direct applications of CLIP or of more complicated architectures built on top of it. One particularly interesting case is StyleCLIP, which employs CLIP to create a text-based interface for image manipulation.

Conclusion

There are three aspects of the CLIP model and its applications that I find especially worth noting:

CLIP delivers on the promise of “big data”, i.e. the promise that we just need sufficiently large datasets to solve many problems. Often data without labels is worthless, and generating the labels is costly. CLIP repeats in computer vision the recent successes of unsupervised (or at least not manually supervised) pretraining in NLP.

The idea behind CLIP is incredibly simple, and still it is a step in the direction of a more general kind of AI. Key to CLIP’s performance is the existence of powerful image and text encoders with the capacity to effectively fit huge training sets - and of course the hardware to do this in a reasonable amount of time.

Last but not least CLIP showcases the potential for natural language interfaces which we can use to “communicate” with a model and thus adapt the task performed by it.

So much for a high-level outline of CLIP. I once again recommend having a look at the original paper, which makes a lot of what I have mentioned more technically precise. Another useful resource is OpenAI’s own blog article, which is also slightly more technical than what I have discussed here.