Extracting information from documents

Frank Weilandt (PhD)

There is a growing demand for automatically processing letters and other documents. Powered by machine learning, modern OCR (optical character recognition) methods can digitize the text. But the next step consists of interpreting it. This requires approaches from fields such as information extraction and NLP (natural language processing). Here we go through some heuristics how to read the date of a letter automatically using the Python OCR tool pytesseract. Hopefully, you can adapt some ideas to your own project.

Input data

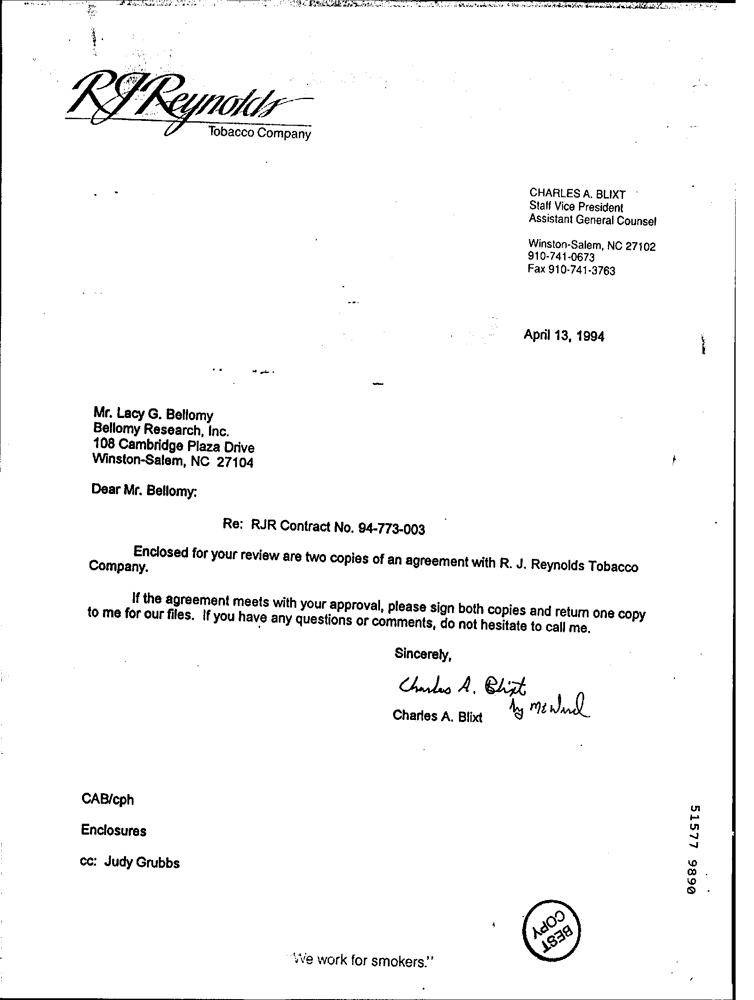

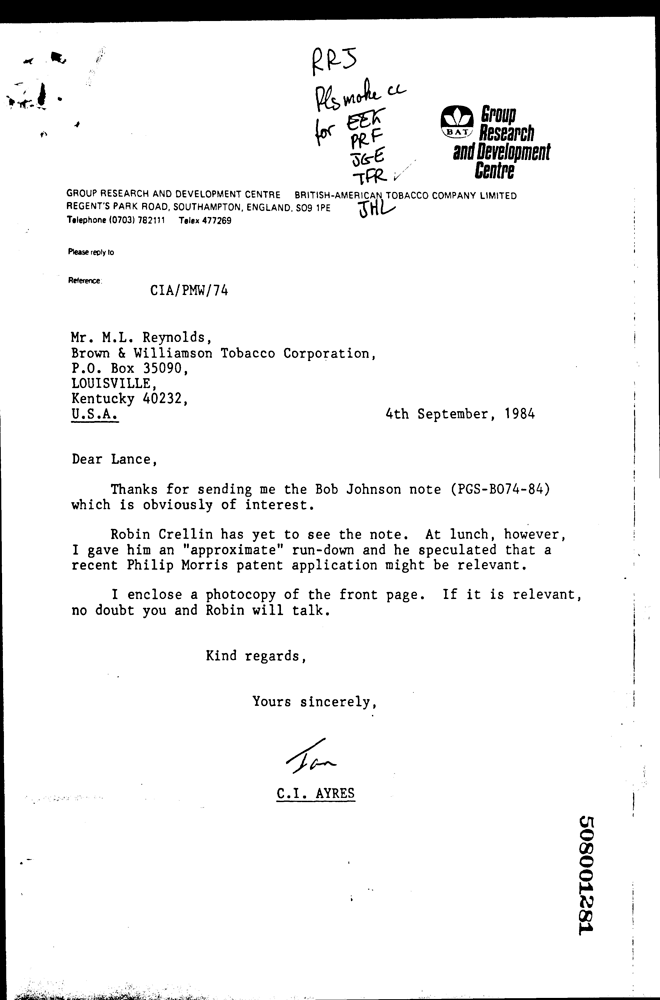

It is not easy to find publicly available scanned documents to test the approach. We used the data set RVL-CDIP. It contains several types of documents -- one type is letter. These documents are part of a large corpus of Tobacco industry documents.

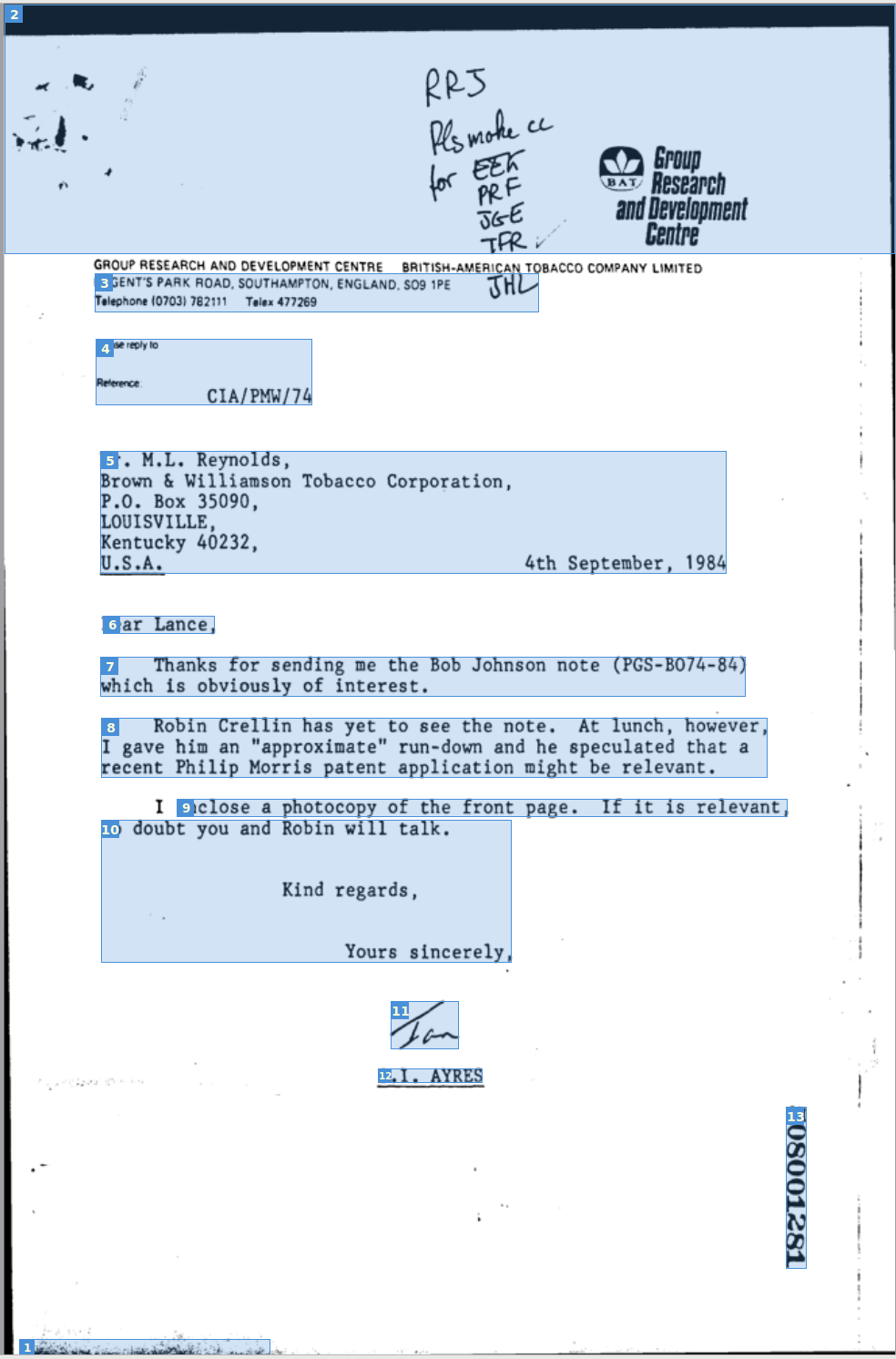

Each image is 1000 pixels high. The widths of the images differ. Typical letters look like this (original resolution is 754x1000 and 600x1000 pixels, respectively).

These image files are challenging because the height of 1000 pixels is rather low. A letter on paper is 11 inches high. Hence, our files have 1000/11=91 DPI (dots per inch). Modern OCR methods usually recommend 300 dpi for the input image. Therefore the OCR will get some letters and digits wrong.

Approach

The algorithm looks for phrases that look like a date. Then it picks the one which appears in the highest position in the document. In the corpus we used, almost every date contained the month written as a word (e.g. April), the day written in digits (13) followed by the year (1994). Sometimes, the day was printed before the month (e.g. 4th September, 1984). The algorithm looks for the patterns M D Y and D M Y where M is a month given as a word, D is a number representing the day and Y a number representing a year.

Software Tools

Our implementation runs in a Jupyter Notebook with Python 3. We use Tesseract version 4, for doing OCR through the wrapper pytesseract. Since the software sometimes gets a letter of the month wrong (e.g., duly instead of July), we accept all strings which almost look like a month in the sense that only a few letters need to be changed to reach a valid month. The number of these operations is called the Levenshtein distance, a common string metric in natural language processing (NLP). For example, the Levenshtein distance of duly and July is 1. Similarly for Moy, Septenber or similar errors. We use python-Levenshtein. For detecting numbers (years and days), we use regular expressions. We process all the tables in Pandas and use tqdm to have a neat progress bar.

Algorithm step by step

First we import all the packages needed and enter where the image files from our scans are saved.

import os

import re

import Levenshtein

import pandas as pd

import pytesseract

from tqdm import tqdm_notebook

image_dir = 'images'

ocr_dir = image_dir + '_ocr'OCR

We run the OCR on each image. The function pytesseract.imagetodata creates a table with tabular-separated values. Since this step can take several seconds for each document, we write this information to disk. It also contains the position of each word which was read.

image_files = os.listdir(image_dir)

if not os.path.isdir(ocr_dir):

os.mkdir(ocr_dir)

print('Writing output of OCR to directory %s' % ocr_dir)

for f in tqdm_notebook(image_files):

image_file = os.path.join(image_dir, f)

info = pytesseract.image_to_data(image_file, lang='eng')

tsv_file = os.path.join(ocr_dir, os.path.splitext(f)[0]+'.tsv')

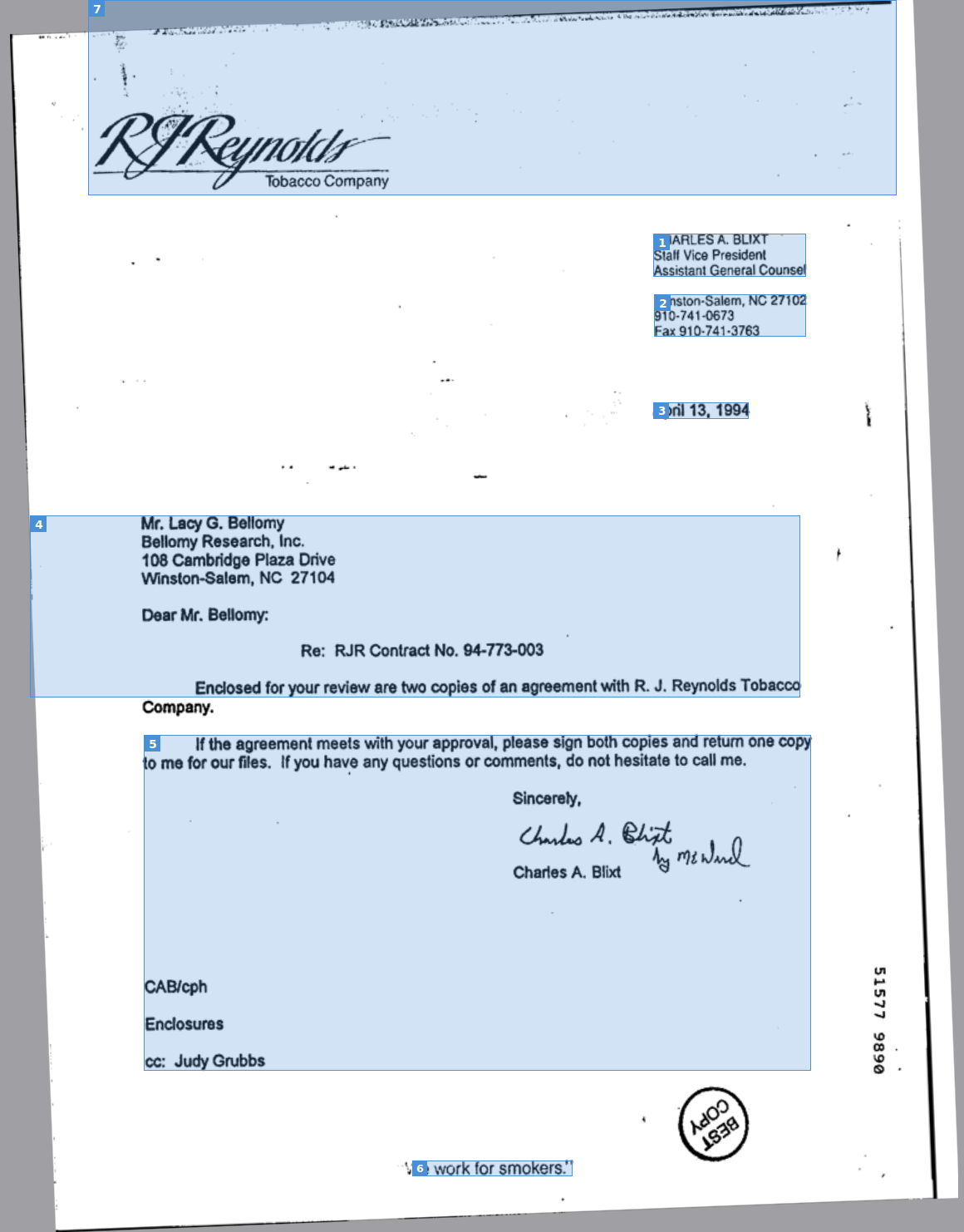

with open(tsv_file, 'wt') as file:

file.write(info)The files we just generated contain more information than just the plain text. Tesseract subdivides the text into blocks, paragraphs, lines and words. For each word, it also gives the bounding box for this word. For example, the attribute top of a word contains the position in pixels counted from the top of the page. To get a quick impression of the output of Tesseract, we use gImageReader, a front-end for Tesseract. Each blue box represents a block detected by Tesseract, the result of layout analysis. Note that the date is not always recognized as a separate block.

Finding the closest month

The following function checks whether a given string looks similar to a month. For each word, it returns the month (as a digit) which is most similar and the Levenshtein distance to this month.

month_strings = ['January', 'February', 'March', 'April', 'May', 'June', 'July','August', 'September', 'October', 'November', 'December']

def closest_month(word):

df = pd.DataFrame({'month': month_strings})

df['dist'] = df['month'].apply(lambda month: Levenshtein.distance(word, month))

idxmin = df['dist'].idxmin()

return (idxmin+1, df.loc[idxmin, 'dist'])An example that appeared:

closest_month('Cetober')yields

(10, 2)The month October was recognized (first component of the output), and the Levenshtein distance between the misspelled word and the correct spelling is 2.

Finding string triples which look like a date

The following function uses the complete output from the OCR and returns the dataframe df_year, which lists all the occurences of dates in the document. For each date, we also explicitly save the day and month. At first, df_dates lists all the years. We accept a string as representing a year if it matches the regular expression ^[12]\d{3}$, i.e., if the string consists of only a digit 1 or 2 followed by exactly three digits. Then we remove the years which do not have the name of a month in front of them. The dataframe df_months first contains the two words (strings) right in front of the year and then keeps only the strings with a Levenshtein distance of at most 2 to the closest month.

def find_dates(df_complete):

mask_year = df_complete['text'].str.match(r'^[12]\d{3}$')

df_dates = df_complete[mask_year]

df_dates = df_dates.assign(month=0, day=0)

for idx in df_dates.index.values:

df_months = df_complete.loc[idx-2:idx-1].copy()

if idx-2 not in df_complete.index.values:

continue

if idx-1 not in df_complete.index.values:

continue

df_months['month'] = df_months['text'].apply(lambda x: closest_month(x)[0])

df_months['distance'] = df_months['text'].apply(lambda x: closest_month(x)[1])

df_months = df_months[df_months['distance'] <= 2]

if not df_months.empty:

idx_month = df_months['distance'].idxmin()

df_dates.loc[idx, 'month'] = df_months.loc[idx_month, 'month']

idx_day = [idx-2, idx-1]

idx_day.remove(idx_month)

idx_day = idx_day[0]

match_digits = re.search(r'\d+', df_complete.loc[idx_day, 'text'])

if match_digits is not None:

df_dates.loc[idx, 'day'] = match_digits.group()

df_dates = df_dates[df_dates['month'] > 0]

return df_datesPutting it all together

Now we can finally combine all this to iterate over all the image files containing the documents. The resulting table will contain one line for each document. Our notebook processes about 20 tsv files per second.

ocr_files = sorted(os.listdir(ocr_dir))

df_extracted_dates = pd.DataFrame(columns=['date_string', 'year', 'month', 'day'], index=ocr_files)

for file in tqdm_notebook(ocr_files):

tsv_file = os.path.join(ocr_dir, file)

df = pd.read_csv(tsv_file, sep=r'\t', dtype={'text': str}, engine='python')

df = df[df.conf>-1] # remove empty words

df.dropna(subset=['text'], inplace=True)

if df.empty:

continue

dates_found = find_dates(df)

if not dates_found.empty:

idx_topmost_date = dates_found['top'].idxmin()

df_extracted_dates.loc[f, 'month'] = dates_found.loc[idx_topmost_date, 'month']

df_extracted_dates.loc[f, 'day'] = dates_found.loc[idx_topmost_date, 'day']

df_extracted_dates.loc[f, 'year'] = dates_found.loc[idx_topmost_date, 'text']

day_string = dates_found.loc[idx_topmost_date, 'day']

date_string = df.loc[idx_topmost_date-2:idx_topmost_date, 'text'].str.cat(sep=' ')

df_extracted_dates.loc[f, 'date_string'] = date_string

output_file = image_dir + '_results.csv'

df_extracted_dates.to_csv(output_file)Example output

Our notebook generates the following output file given the images from above and some others. A row remains empty if the algorithm could not find a date.

date_string |

year |

month |

day |

|

0000.tsv |

"April 13, 1994" |

1994 |

4 |

13 |

0001.tsv |

"Noveaber 15, 1971" |

1971 |

11 |

15 |

0002.tsv |

"Cetober 31, 1994" |

1994 |

10 |

31 |

0003.tsv |

"January 25, 1985" |

1985 |

1 |

25 |

0004.tsv |

||||

0005.tsv |

"4th September, 198 |

1984 |

9 |

4 |

Summary

While implementing this solution, we observed the following:

When the quality of the OCR is not optimal, but we know what words we look for, then the Levenshtein distance is more useful than a strict regular expression. It seems pretty difficult to represent "allow two letters to be wrong" as a regular expression. But we want to find misspellings like

Cetober.Most failures of the algorithm were due to failures of the OCR. Using Google Cloud Vision, one would get better results. But we kept our approach since it is completely open source.

Finding other information like the name of the recipient is usually more challenging because it cannot be detected by such a simple pattern like

D M Yabove. Stay tuned to our blog to learn about more advanced natural language processing methods.

Want to read more? We already did projects that implement information extraction: The automatic verification of service charge settlements and the automatic legal review of rental contracts.