Managing layered requirements with pip-tools

Augusto Stoffel (PhD)

When building Python applications for production, it's good practice to pin all dependency versions, a process also known as “freezing the requirements”. This makes the deployments reproducible and predictable. (For libraries and user applications, the needs are quite different; in this case, one should support a large range of versions for each dependency, in order to reduce the potential for conflicts.)

In this post, we explain how to manage a layered requirements setup without forgoing the improved conflict resolution algorithm introduced recently in pip. We provide a Makefile that you can use right away in any of your projects!

The problem

There are many tools in the Python ecosystem to manage pinned package lists. The simplest, perhaps, is the pip freeze command, which prints a list of the currently installed packages and their versions. Its output can be fed to pip install -r to reinstall the same collection of packages.

A more sophisticated approach building on this idea is provided by the pip-compile and pip-sync tools from the pip-tools package. The process is summarized in this picture:

So if, say, requirements.in contains

torch

pytest

jupyterthen the resulting requirements.txt will contain a list of all dependencies of these packages, plus the dependencies of the dependencies, and so on — all with compatible version choices.

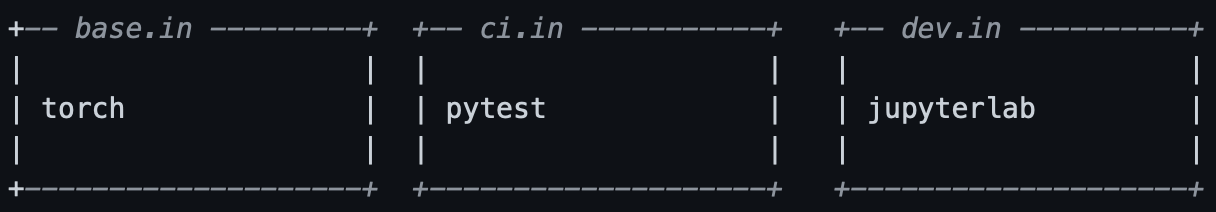

Now, let's assume that pytest and jupyter aren't really needed in production; typically, the latter is only needed for local development and the former is needed for the CI as well as local development. So we would split our requirements into three files: base.in listing torch, ci.in listing pytest, and dev.in listing jupyter.

In production, we only need to install the “base” requirements; on the CI, we need the base as well as the CI set; and for local development, all three requirement sets are needed.
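As a rough illustration of this layering, the sketch below maps each environment to the pinned files it installs and builds the matching pip-sync invocation. The LAYERS table and the sync_command helper are hypothetical names introduced for this example, and the .txt file names assume one pinned file per layer (base, ci, dev).

```python
# Hypothetical helper: which pinned requirement files each environment installs.
LAYERS = {
    "production": ["base.txt"],
    "ci": ["base.txt", "ci.txt"],
    "dev": ["base.txt", "ci.txt", "dev.txt"],
}


def sync_command(environment):
    """Return the pip-sync argument list for the given environment."""
    return ["pip-sync", *LAYERS[environment]]


if __name__ == "__main__":
    for name in LAYERS:
        print(name, "->", " ".join(sync_command(name)))
```

Each layer simply extends the previous one, so the production environment never sees the CI or development packages.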

The pip-tools documentation already suggests a procedure to compile the above .in files into a collection of pinned .txt requirements. The idea is to first compile base.in, then use base.txt in conjunction with ci.in to produce ci.txt, and so on.

The problem with this approach is that PyTorch and Pytest have dependencies in common; when we compile base.in with no regard to ci.in, we may end up selecting a package version that conflicts with Pytest. As a project (and its list of requirements) grows, the potential for conflicts and the effort needed to manually resolve them grow exponentially (literally!).
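To make the failure mode concrete, here is a toy model, not pip's actual resolver. It assumes a single shared dependency whose acceptable versions are given as plain integer sets, and a resolver that always prefers the newest acceptable version. All version numbers and function names are made up for illustration.

```python
# Toy model: versions of one shared dependency accepted by each layer.
BASE_ALLOWS = {1, 2, 3}  # made-up versions accepted by the base layer
CI_ALLOWS = {1, 2}       # made-up versions accepted by the CI layer


def pick_newest(allowed):
    """Mimic a resolver that prefers the newest acceptable version."""
    if not allowed:
        raise ValueError("no version satisfies all requirements")
    return max(allowed)


def resolve_layered(base, ci):
    """Pin the base layer first, then try to satisfy CI with that pin."""
    pinned = pick_newest(base)
    return pick_newest({pinned} & ci)


def resolve_jointly(base, ci):
    """Let the resolver see both layers at once."""
    return pick_newest(base & ci)


if __name__ == "__main__":
    print("joint:", resolve_jointly(BASE_ALLOWS, CI_ALLOWS))
    try:
        resolve_layered(BASE_ALLOWS, CI_ALLOWS)
    except ValueError as error:
        print("layered:", error)
```

In this toy setup the layered strategy pins version 3 for the base layer, which the CI layer rejects, while the joint strategy settles on version 2.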

The solution

Here is a Makefile that lets you keep a layered requirements setup and still take advantage of automatic conflict resolution. We assume it's placed in the requirements/ directory of your project, next to base.in, ci.in, and dev.in files similar to the above.

## Summary of available make targets:

##

## make help -- Display this message

## make all -- Recompute the .txt requirements files, keeping the

## pinned package versions. Use this after adding or

## removing packages from the .in files.

## make update -- Recompute the .txt requirements files files from

## scratch, updating all packages unless pinned in the

## .in files.

help:

@sed -rn 's/^## ?//;T;p' $(MAKEFILE_LIST)

PIP_COMPILE := pip-compile -q --no-header --allow-unsafe --resolver=backtracking

constraints.txt: *.in

CONSTRAINTS=/dev/null $(PIP_COMPILE) --strip-extras -o $@ $^

%.txt: %.in constraints.txt

CONSTRAINTS=constraints.txt $(PIP_COMPILE) --no-annotate -o $@ $<

all: constraints.txt $(addsuffix .txt, $(basename $(wildcard *.in)))

clean:

rm -rf constraints.txt $(addsuffix .txt, $(basename $(wildcard *.in)))

update: clean all

.PHONY: help all clean updateTo use this, you should also add the line

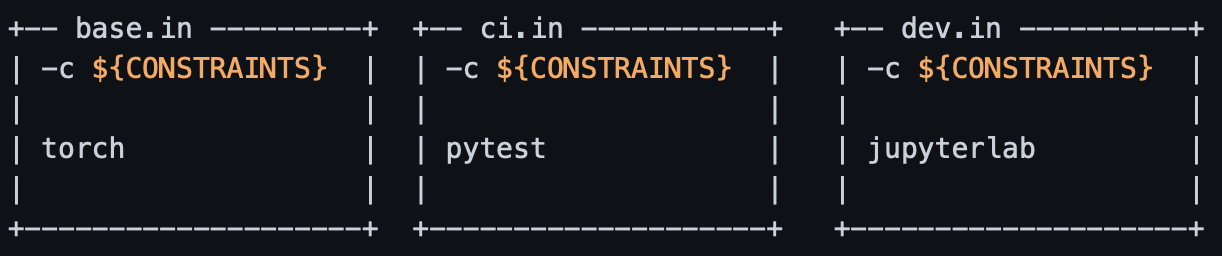

-c ${CONSTRAINTS}at the top of each .in file. So our simple example now looks like this:

Now let's go through our Makefile line by line. The help target just prints the commentary at the top of the file. Next, we define the PIP_COMPILE variable to save typing. You can adjust the switches as desired here, for instance, to add hash checks. Note that the --allow-unsafe option is not unsafe at all, and will become the default in a future version of pip-compile.

The first actually interesting lines read:

    constraints.txt: *.in
    	CONSTRAINTS=/dev/null $(PIP_COMPILE) --strip-extras -o $@ $^

This computes a constraints.txt file using all the .in files of the project as input. The essential point here is that pip's resolver gets a chance to see all packages in the project at once. The CONSTRAINTS=/dev/null setting is a trick to effectively ignore the -c ${CONSTRAINTS} directive we added to the top of our .in files. The Makefile syntax $@ expands to the target file name, in this case constraints.txt, and $^ expands to its prerequisites, in this case all .in files.
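The trick relies on environment variables being expanded inside requirements files. The sketch below is a rough imitation of that substitution, not pip's own code: the expand function and the PLACEHOLDER pattern are names invented for this example, and the environment is passed in as a plain dictionary so the behavior is easy to see.

```python
import re

# ${NAME} placeholders made of upper-case letters, digits, and underscores.
PLACEHOLDER = re.compile(r"\$\{([A-Z0-9_]+)\}")


def expand(line, env):
    """Replace ${NAME} with env[NAME]; keep the placeholder if NAME is unset."""
    def substitute(match):
        value = env.get(match.group(1))
        return value if value else match.group(0)
    return PLACEHOLDER.sub(substitute, line)


if __name__ == "__main__":
    directive = "-c ${CONSTRAINTS}"
    # First pass: an empty constraints file, so nothing is constrained.
    print(expand(directive, {"CONSTRAINTS": "/dev/null"}))
    # Second pass: the jointly resolved pins constrain each layer.
    print(expand(directive, {"CONSTRAINTS": "constraints.txt"}))
```

The same .in file therefore yields an unconstrained directive in the first pass and a constrained one in the second, depending only on the value of CONSTRAINTS.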

Next, we have

    %.txt: %.in constraints.txt
    	CONSTRAINTS=constraints.txt $(PIP_COMPILE) --no-annotate -o $@ $<

This says that in order to produce <file>.txt, we need <file>.in and constraints.txt as input. The Makefile syntax $< used here expands to the first prerequisite of the make target. Therefore, the command to produce <file>.txt is, essentially,

    pip-compile -o <file>.txt <file>.in

but additionally, we make sure that pip sees the -c constraints.txt directive at the top of <file>.in. (As an aside, pip-compile has a --pip-args command line option that might seem to provide an alternative way to achieve this; unfortunately, it doesn't work here because pip is not invoked as a subprocess.)

Next, the line

    all: constraints.txt $(addsuffix .txt, $(basename $(wildcard *.in)))

says that make all means to produce <file>.txt (using the above recipe) for each <file>.in in our requirements directory. If some .txt files already exist, the pinned versions listed in them are not updated; this is the usual and desired pip-compile behavior. You should run this every time you add or remove something from some of the .in files but wish to keep all other package versions unchanged.
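For readers less familiar with make's text functions, the following sketch shows roughly what the expression $(addsuffix .txt, $(basename $(wildcard *.in))) computes. The txt_targets function is a hypothetical name, and the result is sorted here only to make the output predictable.

```python
import tempfile
from pathlib import Path


def txt_targets(directory):
    """List <file>.txt for every <file>.in in directory, sorted by name."""
    return sorted(
        path.with_suffix(".txt").name for path in Path(directory).glob("*.in")
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Create a throwaway requirements directory with one unrelated file.
        for name in ("base.in", "ci.in", "dev.in", "README.md"):
            Path(tmp, name).touch()
        print(txt_targets(tmp))
```

Only files ending in .in contribute a target, so adding a new layer is just a matter of dropping another .in file into the directory.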

The make clean command removes all .txt files that come from some .in file.

Finally, make update is just like make all, but first forgets all our pinned version numbers. The end effect is to update each requirement to the most recent viable version.

Bonus: getting the most efficient precompiled PyTorch version

Some packages involve special packaging challenges, and one nice thing about our customized approach is the flexibility to deal with these edge cases.

Take PyTorch, for instance. If using a GPU, you need to get a version matching your CUDA version. If working on a CPU, you can save a good deal of bandwidth and disk space by getting the CPU-only version. Each of these variants is available through a dedicated package index URL.

As of PyTorch version 1.13, pip install torch on Linux installs the binaries compiled for CUDA 11.7. To get the CPU or CUDA 11.6 versions, you can use the following requirements files:

[Image: the base-cpu.txt and base-cu116.txt requirements files]

Note that, unlike base.txt, the two .txt files above are static and not built from .in inputs by pip-compile.

Now, to get your development environment rolling, you can type one of these commands:

    pip-sync base-cpu.txt ci.txt dev.txt    # If working on the CPU
    pip-sync base-cu116.txt ci.txt dev.txt  # If working on the GPU with CUDA 11.6
    pip-sync base.txt ci.txt dev.txt        # If working on the GPU with CUDA 11.7

[Image of the rainbow cake by Marco Verch, found at https://ccnull.de/foto/rainbow-cake/1012324.]